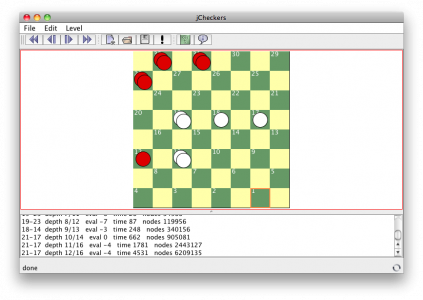

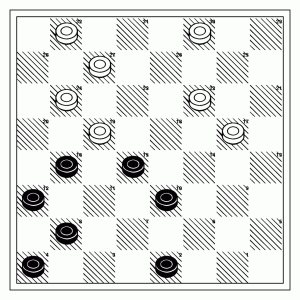

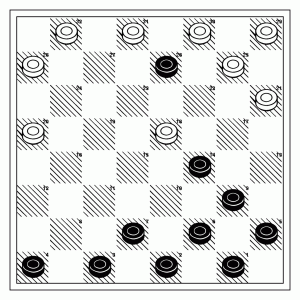

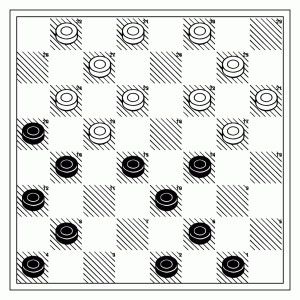

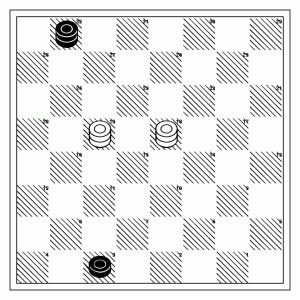

I’m still somewhat baffled by the performance of my checkers program. I keep pondering that perhaps it would be a good idea to take all the lessons I’ve learned, burn it down, and try again with Milhouse2. After all, in the words of Tom Duff (who may not have said this, but should have) “any program worth writing is worth rewriting”. Toward that end, I was reconsidering the data representation that I used, and revisited this nifty page on Bitboards:

I basically use bitboards already, but at the very end of the page, you'll see mention of a novel, if somewhat unobvious, representation credited to Thotsaphon Thanatipanonda, which simplifies move and jump generation. My own code for this was laboriously generated by a Python program, so it would be nice to shrink that code a bit and make it more straightforward. One key point that Ed mentions is that it relies on machine-language rotate instructions, since C has no rotate operator. I thought I'd see what gcc does with the obvious implementation. So, I quickly wrote the following function:

```c
#include <stdint.h>

/* Rotate a 32-bit value left by 7 bits. */
static inline uint32_t
rol7(uint32_t val)
{
    return (val << 7) | (val >> 25);
}
```

(This function uses uint32_t to make sure we have 32-bit values. You can find its definition in stdint.h.) As perhaps we should expect, gcc is smart enough to turn this into a single machine-language rotate instruction (roll, a rotate left, on x86). This means that we can write a move generator that handles even and odd rows identically; treating them separately currently at least doubles the size of my own move generator. Pretty nifty.
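To make the "no even or odd rows" point concrete, here is a rough sketch in C. The square numbering is my own assumption, chosen only so that one step up-right adds 1 to the bit index and one step up-left adds 7 (both mod 32); I am not claiming it matches the layout on the bitboard page exactly. The names `rotl32`, `rotr32`, `bit_index`, `region`, `up_movers` and `up_left_targets` are hypothetical helpers, not part of Milhouse.

```c
#include <stdint.h>

/* Rotate by n bits; masking the shift counts keeps n == 0 well defined. */
static inline uint32_t rotl32(uint32_t v, unsigned n)
{
    return (v << (n & 31)) | (v >> ((32 - n) & 31));
}

static inline uint32_t rotr32(uint32_t v, unsigned n)
{
    return (v >> (n & 31)) | (v << ((32 - n) & 31));
}

/* ASSUMED numbering (not taken from the referenced page): the dark square
   at column x, row y (x + y even) gets bit (4*y - 3*x) mod 32. Moving
   up-right then adds 1 to the index, and moving up-left adds 7. */
static unsigned bit_index(int x, int y)
{
    return (unsigned)(4 * y - 3 * x) & 31u;
}

/* Dark squares inside the inclusive column/row ranges. A real engine
   would precompute these masks as constants. */
static uint32_t region(int x0, int x1, int y0, int y1)
{
    uint32_t m = 0;
    for (int y = y0; y <= y1; y++)
        for (int x = x0; x <= x1; x++)
            if (((x + y) & 1) == 0)
                m |= UINT32_C(1) << bit_index(x, y);
    return m;
}

/* Men (moving up the board) with an empty square diagonally ahead.
   The edge masks stop a rotate from wrapping a piece around the board;
   there is no separate case for even and odd rows. */
static uint32_t up_movers(uint32_t men, uint32_t empty)
{
    uint32_t can_ne = region(0, 6, 0, 6); /* not right edge, not top row */
    uint32_t can_nw = region(1, 7, 0, 6); /* not left edge, not top row */
    return (rotr32(empty, 1) & men & can_ne)
         | (rotr32(empty, 7) & men & can_nw);
}

/* Empty squares reachable by an up-left step: rotate eligible men by 7. */
static uint32_t up_left_targets(uint32_t men, uint32_t empty)
{
    return rotl32(men & region(1, 7, 0, 6), 7) & empty;
}
```

With twelve men on the bottom three rows and the middle two rows empty, `up_movers` picks out exactly the four men on the third row. Writing the rotates with masked shift counts also seems to be a form that compilers recognize as a rotate, though I have only looked at gcc's output for the fixed-count version above.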