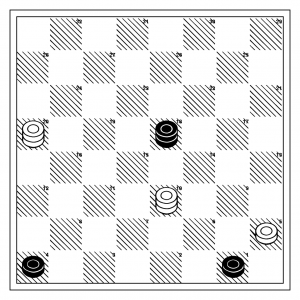

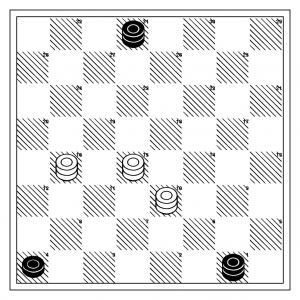

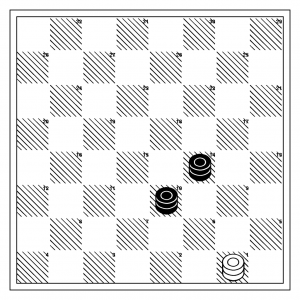

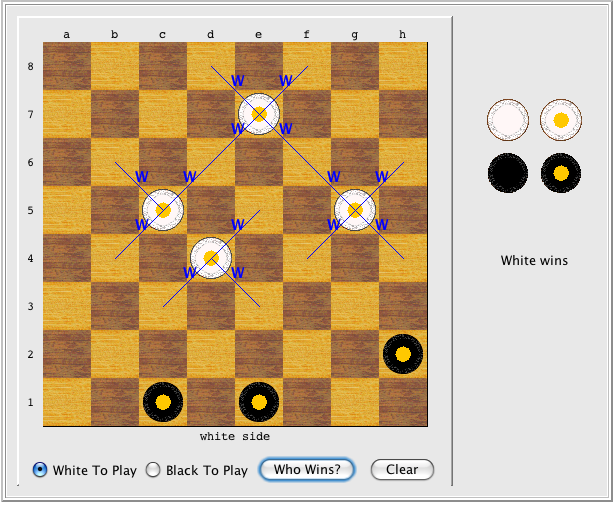

Oddly, Chinook seemed to know the end was coming before Milhouse recognized it. But sure enough, Milhouse managed to steer itself to victory.

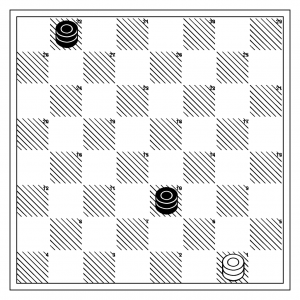

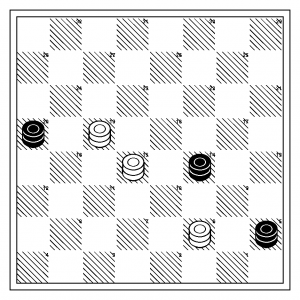

[Event "Sparring Match"]
[Date "2009-04-27"]
[Black "Milhouse"]
[White "Chinook (Amateur)"]
[Site "Internet"]
[Result "1-0"]

1. 12-16 22-17 2. 16-19 24x15 3. 11x18 23x14 4. 9x18 28-24 5. 8-11 26-23 6. 6-9 23x14 7. 9x18 24-19 8. 2-6 17-14 9. 10x17 21x14 10. 4-8 31-26 11. 11-15 19x10 12. 6x15 25-21 13. 8-11 29-25 14. 1-6 26-22 15. 6-9 21-17 16. 9-13 27-23 17. 18x27 32x23 18. 15-19 23x16 19. 11x20 30-26 20. 20-24 25-21 21. 7-10 14x7 22. 3x10 26-23 23. 24-27 23-19 24. 27-31 19-16 25. 31-26 16-11 26. 10-15 11-8 27. 26-23 8-3 28. 23-18 3-8 29. 18x25 17-14 30. 25-22 14-10 31. 22-18 8-11 32. 15-19 10-7 33. 13-17 21x14 34. 18x9 11-15 35. 19-23 7-2 36. 23-26 15-10 1-0