I’m going to be nostalgic for a few moments. If you are too young to have any sense of nostalgia, skip ahead to the bold text below. You were warned!

A few days ago, I mentioned that I was having a bit of a flashback, thinking of my first experience with timesharing computers back in the early 1980s. I was a child of the microcomputer revolution: I remember reading the articles on the Altair and the COSMAC ELF in Popular Electronics (I was a precocious 12-year-old at the time) and would eventually buy my first computer (an Atari 400) in 1980. By 1982, I was attending the University of Oregon and got my first exposure to “big” timesharing computers: their DEC 1091 (running TOPS-10) and their IBM 4341.

I must admit, I wasn’t all that impressed at the time. I did take FORTRAN and COBOL classes though. I actually did JCL and punch cards on the IBM. I worked in the documents room at the Computing Center for a while, and I recall that I made some small modification to their software (in FORTRAN on the DEC) that kept track of check-ins and check-outs. It may have been the first time I was ever paid to program.

My recollection is that by late in my second year, I was obsessed with the idea of getting an account on one of the three VAX 11/750s in the computer science department, all of which ran BSD. To do that, I needed to start taking graduate-level classes. So, I applied for them. I recall sitting in Professor Ginnie Lo’s compiler class on the first day. She asked me if I was a graduate student, and I replied “no”. She then asked me if she had given me permission to take her course. I replied (somewhat nervously, as I recall) “no”. To her credit, she allowed me to stay.

I digress.

Anyway, after getting an account on one of the department’s Unix machines (and having access to another via my job in the Chemistry department) I found little reason to continue using the DEC 1091. I doubt that I used it more than a couple of times after 1985.

Which, in retrospect, is kind of too bad. The 36-bit DEC architectures were a significant part of computing history. The PDP-10 had a pretty nice architecture, with an interesting, symmetric instruction set. I didn’t have any appreciation of it back then, but with the wisdom granted by experience (and no doubt a certain amount of nostalgia) I find that it is actually pretty interesting.
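To give a taste of what I mean, here is a minimal Python sketch of how a 36-bit PDP-10 instruction word breaks down into fields: a 9-bit opcode, a 4-bit accumulator, an indirect bit, a 4-bit index register, and an 18-bit address. The field layout is the standard one, and `0o200` is the `MOVE` opcode. The names `Instruction`, `decode`, and `encode` are just ones I made up for illustration; they aren't part of any emulator or assembler.

```python
from typing import NamedTuple

WORD_BITS = 36  # the PDP-10 word size


class Instruction(NamedTuple):
    """Fields of a basic PDP-10 instruction word (hypothetical helper type)."""
    opcode: int    # 9 bits, the leftmost bits of the word
    ac: int        # 4 bits, accumulator number
    indirect: int  # 1 bit, indirect addressing flag
    index: int     # 4 bits, index register number
    address: int   # 18 bits, the right half of the word


def decode(word: int) -> Instruction:
    """Split a 36-bit word into its instruction fields."""
    if not 0 <= word < (1 << WORD_BITS):
        raise ValueError("word does not fit in 36 bits")
    return Instruction(
        opcode=(word >> 27) & 0o777,
        ac=(word >> 23) & 0o17,
        indirect=(word >> 22) & 0o1,
        index=(word >> 18) & 0o17,
        address=word & 0o777777,
    )


def encode(ins: Instruction) -> int:
    """Pack instruction fields back into a 36-bit word."""
    return (
        ((ins.opcode & 0o777) << 27)
        | ((ins.ac & 0o17) << 23)
        | ((ins.indirect & 0o1) << 22)
        | ((ins.index & 0o17) << 18)
        | (ins.address & 0o777777)
    )


if __name__ == "__main__":
    # MOVE 1,100 : load accumulator 1 from memory location 100 (octal)
    word = 0o200040000100
    print(decode(word))
    print(format(encode(decode(word)), "012o"))
```

Everything is written in octal because that is how PDP-10 listings are traditionally shown: twelve octal digits make up one word, and the two six-digit halves line up with the left and right half-words.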

So… I embarked on a mini-project to recreate the environment that I dimly remember.

Luckily, you don’t need a physical PDP-10: there are emulators that do an excellent job of recreating a virtual PDP-10 for you to program. I am using the simh emulator, which is actually a suite of programs for a wide variety of early machines. The last version of TOPS-10 was 7.04, but in experimenting with it, I found that I had difficulty making the simulated “printer” device work properly. Besides, v7.04 was actually a bit later than when I used the DEC. So, I’ve instead gotten version 7.03 up and running (which dates to around 1986).

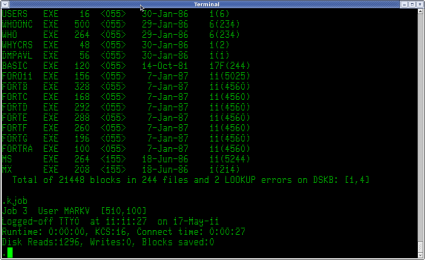

Using the online documentation from various sources, I got the installation tapes up and running, and created a simulated system with a single RP06 disk drive. The printer is emulated just as a text file: I was thinking of creating a gadget to store each print job in its own file, which we could view from a web page. I don’t recall anything of our networking with the 1091 (I suspect we had DECNET at the time) but TOPS-10 didn’t support TCP/IP anyway, so networking wasn’t a priority. I’ve installed BASIC and FORTRAN, as well as the TOPS-10 KERMIT tape. Of course you can program with MACRO-10 as well. I’ve got the DECMAIL system installed, but it has difficulty working without DECNET installed, and seems to require a patch. I’ll try to get that working shortly.

Eventually, I’ll get some games like ADVENT online. I created a single user account, but don’t remember some of the finer points of creating project defaults and SWITCH.INI files. I also seem to have forgotten the logic behind SETSRC and the like, but it will come to me. I never was a TOPS-10 operator, so most of the system-level stuff is new to me (but luckily fairly well documented). For now, I can log in, run BASIC programs, edit, compile and run FORTRAN and simple MACRO-10 programs, and… well… log out.

**Okay, why do this?**

I’m not actually one of those guys who is overly nostalgic. I stare at my iPhone, and I am amazed. I don’t want to trade it for a PDP-10. But I also think that the history of technology is fascinating, and through emulation, we can gain insight into the evolution of our modern computers by actually playing with the machines of the past.

Here’s one obvious insight: this was a timesharing operating system. It seems that in many ways the bulk of the system was actually designed to do accounting on the various things that you did. There are all sorts of mechanisms for tracking how much CPU you used, how many disk reads and writes you did, how many cards you punched, and how many pages of line printer output you sent. There was a well-defined batch queueing system, so that you could use every bit of available CPU to maximum efficiency. The system assumed that there would always be a trained operator on duty to service the machine. In that sense, it is completely the opposite of what we saw developing from the personal computer world: when CPUs became cheap enough, it was obvious that you’d just have one, which you could do with as you saw fit. If it sat idle, well, it was your computer. But those early personal operating systems didn’t really have any support for multitasking or memory protection. It took quite some time for that to trickle into the personal computing world.

It’s also interesting to compare it to an operating system like ITS (which you can also run in emulation), which was developed in a purely academic environment. All of the accounting stuff I mentioned above is completely absent in ITS: the ITS system was designed to basically present a virtual PDP-10 to everyone who was connected to it. There was very little to protect you from mistakes that either you or someone else could make. The command interface was just DDT: the PDP-10 machine language debugger. ITS was an important step in the evolution of computer operating systems.

You can learn about these things by reading books about them, but playing with them will undoubtedly give you some insights that you can’t get from books alone.

Is anyone else interested in this stuff? I’m considering making a virtual PDP-10 available to the public for mucking around with, and maybe even typing up some short notes on how to do common tasks. I’ll probably also try to get a version of ITS running in the same way.

I’ve thought about maybe trying to scrounge some old VT100s (maybe four of them), wire them up to a modern small computer running simh, and create a virtual system that you could bring to appropriate events like the Maker Faire. Kind of a “Computing Time Machine”.

I’ll think about it some more. If anyone else has similar interests (or could mentor me on the ways of 36-bit computers), drop me an email or leave me a comment below.