In thinking about the 555 timer AM transmitter that I constructed last night and trying to understand how it might work, I eventually ended up with a basic question about pulse width modulation. It boiled down to this: if you are generating a PWM signal at a fixed rate (say 540 kHz), but with pulses whose duty cycle varies from 0 to 100%, how does this implement amplitude modulation?

Consider a rectangular pulse centered inside an interval running from -1 to 1. A pulse with a duty cycle of D percent then runs from -D/100 to +D/100. (From now on, we’ll find it convenient to express D as a fraction and not as a percent, so the pulse runs from -D to +D.) We can use Fourier analysis to decompose this pulse train into a series of sines and cosines at multiples of the base rate. For our application, we can ignore the DC component (it’ll be trimmed off by a DC blocking cap anyway) and we can assume that all higher multiples of the carrier frequency will be removed by a low pass filter. The only thing we really need to look at is the component right at the carrier frequency.

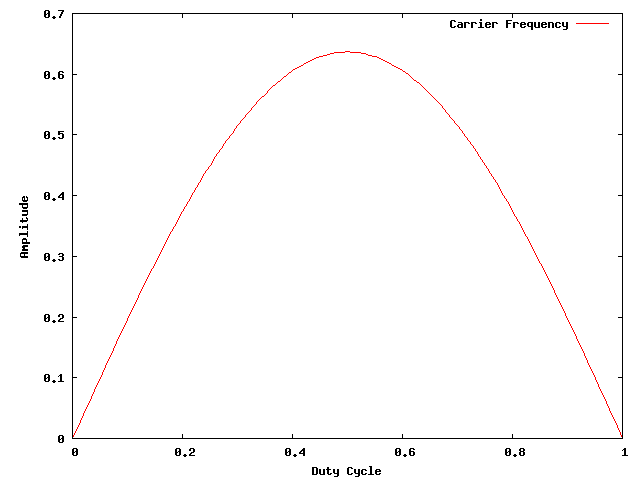

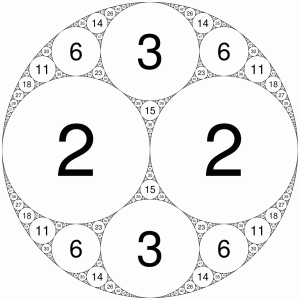

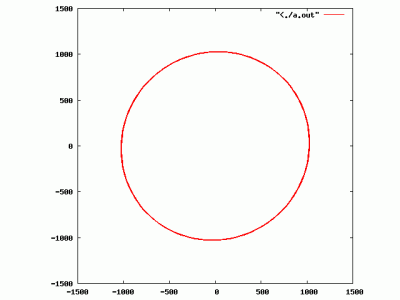

We can do this analytically without too much trouble. Because the pulse is centered on zero, only the cosine terms survive. To compute the Fourier coefficient for the nth multiple of the carrier, we compute 1/L * integral(cos(n * pi * t / L), dt, -D, D). (Sorry, WordPress isn’t all that good at this, and I wasn’t able to get MathJax to work). If we think of the complete cycle as going from -1 to 1, then L = 1, and for n = 1 we can work this out: the amplitude of the carrier turns out to be 2.0 * sin(π * D) / π. We can make a graph, showing what the amplitude of the sine wave at the carrier frequency will be for varying duty cycles.

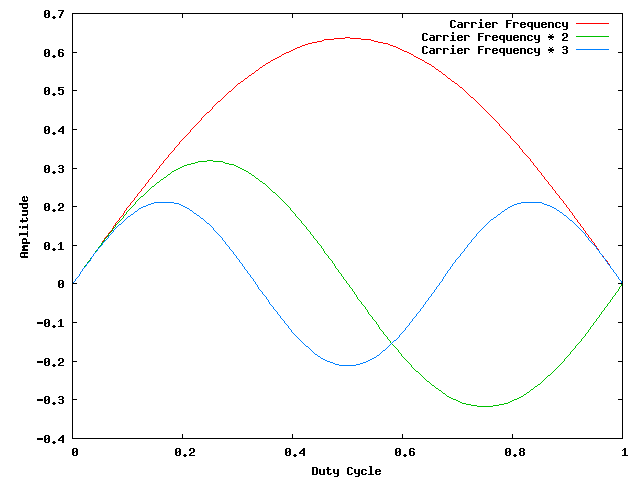
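As a sanity check on the closed form 2.0 * sin(π * D) / π, here is a minimal Python sketch that evaluates the same integral numerically and compares the two. It assumes a pulse of height 1 with L = 1, and the function names and the midpoint-rule step count are my own choices for illustration, not anything from the transmitter circuit.

```python
import math

def carrier_amplitude_closed_form(duty):
    """Closed-form carrier amplitude for a duty cycle given as a fraction."""
    return 2.0 * math.sin(math.pi * duty) / math.pi

def carrier_amplitude_numeric(duty, n=1, steps=10_000):
    """Midpoint-rule estimate of 1/L * integral(cos(n*pi*t/L), dt, -D, D), with L = 1."""
    L = 1.0
    width = 2.0 * duty / steps          # width of each slice of [-D, D]
    total = 0.0
    for i in range(steps):
        t = -duty + (i + 0.5) * width   # midpoint of slice i
        total += math.cos(n * math.pi * t / L) * width
    return total / L

for duty in (0.1, 0.25, 0.5, 0.75, 0.9):
    exact = carrier_amplitude_closed_form(duty)
    approx = carrier_amplitude_numeric(duty)
    print(f"D = {duty:.2f}  closed form = {exact:.6f}  numeric = {approx:.6f}")
```

The two columns should agree closely, and the values should be symmetric about D = 0.5, matching the shape of the graph.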

What does this mean? If we shift the duty cycle of our PWM waveform, we actually are modifying the amplitude (and therefore the power) of the transmitter output at the carrier frequency. The carrier amplitude peaks at a duty cycle of 0.5. As we deviate more from 0.5, it falls, and a larger and larger share of the signal’s energy ends up in the higher harmonics of the carrier frequency.
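To put rough numbers on that, here is a small Python sketch of how much of the non-DC power sits at the carrier for a given duty cycle. It assumes the same unit-height pulse, generalizes the carrier formula to the nth harmonic as 2.0 * sin(n * π * D) / (n * π), and uses the fact that a 0/1 pulse train with duty cycle D has a total AC power of D * (1 - D). The function names are hypothetical.

```python
import math

def harmonic_amplitude(duty, n):
    """Amplitude of the nth multiple of the carrier for a unit-height pulse."""
    return 2.0 * math.sin(n * math.pi * duty) / (n * math.pi)

def ac_power(duty):
    """Total power minus DC power: mean square is D, squared mean is D*D."""
    return duty * (1.0 - duty)

def fundamental_share(duty):
    """Fraction of the AC power carried by the component at the carrier frequency."""
    fundamental_power = harmonic_amplitude(duty, 1) ** 2 / 2.0
    return fundamental_power / ac_power(duty)

for duty in (0.5, 0.4, 0.25, 0.1):
    share = fundamental_share(duty)
    print(f"D = {duty:.2f}  carrier share of AC power = {share:.3f}  "
          f"harmonics share = {1.0 - share:.3f}")
```

Whatever is not at the carrier is spread over the higher multiples, which is the part the low pass filter is assumed to remove.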

I’m sure that was about as opaque an explanation as possible, but it suggests to me a simple software simulation that I might code up this weekend to test my understanding.

Stay tuned.

Addendum: We can work out the relative amplitudes of the first three multiples of the carrier frequency: