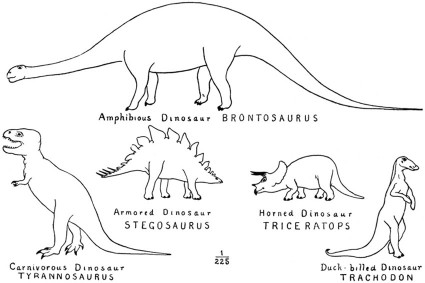

Realization: I’m a dinosaur…

About once a year, I get the urge to push my programming skills and knowledge in a new direction. Some years, this results in some new (usually small) raytracers. Sometimes, it results in a program that plays a moderately good game of checkers. Other years, it results in code that predicts the orbits of satellites.

For quite some time, I’ve been pondering actually trying to develop some modest skills with GPU programming. After all, the images produced by state-of-the-art GPU programs are arguably as good as the ones produced by my software-based raytracers, and are rendered a hundred, a thousand, or even more times faster than the programs that I bat out in C.

But here’s the thing: I’m a dinosaur.

I learned to program over 30 years ago. I’ve been programming in C on Unix machines for about 28 years. I am a master of old-school tools. I edit with vi. I write Makefiles. I can program in PostScript, and use it to draw diagrams. I write HTML and CSS by hand. I know how to use awk and sed. I’ve written compilers with yacc/lex, and later flex/bison.

I mostly hate IDEs and debuggers. I’ve heard (from people I respect) that Visual Studio/Xcode/Eclipse is awesome, that it lets you edit and refactor code, that it has all sorts of cool wizards to write the code that you don’t want to write, that it helps you remember the arguments to all those API functions you can’t remember.

My dinosaur brain hates this stuff.

By my way of thinking, a wizard to write the parts of code you don’t want to write is writing code that I probably don’t really want to read either. If I can’t keep enough of an API in my head to write my application, my application is either just a bunch of API calls, inarticulately jammed together, or the API is so convoluted and absurd that you can’t discern its rhyme or reason.

An hour of doing this kind of programming is painful. A day of doing it gives me a headache. If I had to do it for a living, my dinosaur brain would make me lift my eyes toward the heavens and pray for the asteroid that would bring me sweet, sweet release.

Programming is supposed to be fun, not make one consider shuffling off one’s mortal coil.

Okay, that’s the abstract version of my angst. Here’s the down-to-earth measure of my pain. I was surfing over at legendary demoscene programmer and fellow Pixarian Inigo Quilez’s blog looking for inspiration. He has a bunch of super cool stuff, and was again inspiring me to consider doing some more advanced GPU programming. In particular, I found his live coding thing to be very, very cool. He built an editing tool that allows him to type in shading language code and immediately execute it. Here’s an example YouTube vid to give you a hint:

I sat there thinking about how I might write such a thing. I didn’t feel a great desire to write a text editor (I think I last did it around 1983) so my idea was simple: design a simple OpenGL program that drew a single quad on the screen, using code from a vertex/fragment shader that I could edit using good old fashioned vi. Whenever the OpenGL program noted that the saved version of these programs had been updated, it would reload/rebind the shader, and execute it. It wouldn’t be as fancy as Inigo’s, but I figured I could get it going quickly.
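The "note that the saved file has been updated" step can be sketched in a few lines of C. This is only a minimal sketch under stated assumptions, not the program described here: it assumes a POSIX-style `stat()`, and the names `shader_file` and `shader_file_changed` are hypothetical. It detects that a file was re-saved and nothing more; recompiling and rebinding the shader is left to the caller.

```c
/* Sketch: detect that a shader source file was re-saved by polling its
 * modification time with stat(). All names here are hypothetical. */
#include <assert.h>
#include <stdio.h>
#include <sys/stat.h>
#include <time.h>

/* Tracks one shader source file on disk. */
typedef struct {
    const char *path;   /* e.g. "quad.frag" (hypothetical file name) */
    time_t last_mtime;  /* mtime seen at the last check; 0 = never seen */
} shader_file;

/* Returns 1 if the file's mtime differs from the recorded one (and records
 * the new mtime), 0 if it is unchanged or the file cannot be stat'ed, for
 * example while the editor is in the middle of rewriting it. */
int shader_file_changed(shader_file *sf)
{
    struct stat st;

    assert(sf != NULL && sf->path != NULL);
    if (stat(sf->path, &st) != 0)
        return 0;                     /* missing or unreadable: try again later */
    if (st.st_mtime == sf->last_mtime)
        return 0;                     /* same timestamp: nothing to reload */
    sf->last_mtime = st.st_mtime;
    return 1;
}

/* Intended use, once per frame in the render loop (reload_shaders() is a
 * hypothetical function that recompiles and relinks the program):
 *
 *     if (shader_file_changed(&vert) | shader_file_changed(&frag))
 *         reload_shaders();
 *
 * The non-short-circuit | makes sure both timestamps get updated.
 */
```

The reload itself would go through the usual OpenGL calls (`glShaderSource`, `glCompileShader`, `glLinkProgram`). One caveat of this approach: `st_mtime` has one-second resolution, so two saves within the same second look like one.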

While I have said I don’t know much about GPU programming, that strictly speaking isn’t true. I did some OpenGL stuff recently, using both GLSL and even CUDA for a project, so it’s safe to say this isn’t exactly my first rodeo. But this time, I thought that perhaps I should do it on my Windows box. After all, Windows probably still has the best support for 3D graphics (think I) and it might be of more use. And besides, it would give me a bit broader skill base. Not a bad thing.

So, I downloaded Visual Studio 2010. And just like the Diplodocus of old, I began to feel the pain, slowly at first, as if some small proto-mammals were gnawing at my tail, but steadily growing into a deep throbbing in my head.

On my Mac or my Linux box, it was pretty straightforward to get OpenGL and GLUT up and running. Being the open source guy that I am, I had MacPorts installed on my MacBook, and a few judicious apt-get installs on Linux got all the libraries I needed. On the Mac, the paths I needed to know were mostly /opt/local/{include,lib} and on the Linux box, perhaps /usr/local/{include,lib}. The same six-line Makefile would compile the code that I had written on either platform if I just changed those things.

But on Windows, you have this “helpful” IDE.

Mind you, it doesn’t actually know where any of these packages you might want to use live. So, you go out on the web, trying to find the distributions you need. When you do find them, they aren’t in a nice, self-installing format: they’re just naked zip files, usually with 32 bit and 64 bit versions, and without even a good old .bat file to copy them to the right place for you. On Mac OS X/Linux, I didn’t even need to know if I was running 64 bit or 32 bit: the package managers figured that out for me. On Windows, the system with the helpful IDE, I have to know that I need to copy the libs to a particular place (probably something like \Program Files (x86)\Microsoft SDKs\Windows\v7.0A\Lib) and the include files to somewhere else, and maybe the DLLs to a third place, and if you put a 32 bit DLL where it expected a 64 bit one (or vice versa) you are screwed. But even after dumping the files in those places, you still have to configure your project wizard to add these libraries, by tunneling down through the Linker properties, under tab after tab. Oh, and these tabs where you enter library names like freeglut32.lib? They don’t even bring up the file browser, not that you would really want to go grovelling around in these directories anyway, but at least there would be a certain logic to it.

And then, of course, you can start your Project. Go look up a tutorial on doing a basic OpenGL program in Visual C++, and they’ll tell you to use the Windows Wizard to create an empty project: in other words, all that vaunted technology, and they won’t even write the first line of code for you for your trouble.

After all this, I got to the point where I could hit F5 to build and run, and what happened? My simple (and proven on other operating systems) program failed to start, with the message:

Application was unable to start correctly (0xc000007b)

You have got to be kidding me. When did we transport ourselves back to 1962 or so when a numerical error code might have been a reasonable choice? If your error code can’t tell you a) what is wrong and b) how to fix it, it’s absolutely useless. You might as well just crash the machine.

This was the start of hour three for me. And it was the end of Visual Studio. I must compliment Microsoft on their uninstaller: it worked perfectly, the first actual success of the day.

Undoubtedly some of you out there are going to proclaim that I’m stupid (probably true) or inexperienced with it (certainly true) and that if I just kept at it, all would be well. These are remarkably similar to the claims that I’ve heard from other religions, and I have no reason to believe that the “Faith of Visual Studio” will turn out any better than any of the other religions.

Perhaps before you post, you should just consider that I warned you that I was a dinosaur when I began, and perhaps I was right, at least about that.

Luckily, along with this project, I’ve been thinking of another dimension along which I could develop some new skills, and it turns out that this stuff appears to be more in line with my dinosaur sensibilities, and that’s the Go Programming Language.

Go has some really cool ideas and technology inside, but at its core, it simply seems better to my dinosaur brain. The legendary Rob Pike does a great job explaining its strengths, and almost everything he says about Go resonates with me and addresses the subject of the long rant above. Here’s one of his great talks from OSCON 2010:

I know it will be hard to get my mind wrapped around it, but I can’t help but think it will be a more pleasant experience than the three hours I just spent.

I know the GPU thing isn’t going to go away either. I just kind of wish it would.

Comments

Comment from Aram Hăvărneanu

Time 8/8/2012 at 6:29 am

I agree wholeheartedly. I started with IDEs, didn’t like the bureaucracy and noise but didn’t think much about it. It was the status quo, I assumed this was the best we could do at that point, and everything else was inferior. I was taught that IDEs help you tremendously and I didn’t question this belief.

I then moved to more interesting projects, which by their very nature didn’t allow usage of IDEs, and had to learn the old, simpler way. I haven’t looked back since. I don’t miss the IDE days. I think I feel about those days as older people probably feel about the batch processing era now. You had to give source code to a lady to punch, then move punched cards into a queue, then come back tomorrow after the compilation error, repeat, repeat, repeat. Get all this process and procedure out of my way!

Helpful wizards that generate code are another pet peeve of mine. Even when I used IDEs I hated those and fought hard against having to use them (unsuccessfully, most of the time). When I am programming I probably type code 3%, maybe 5% of my time. The rest of the time is spent thinking and debugging. Optimizing that 3% seems silly, especially considering that the 97% is concerned with reading code, not writing it. Readability is seldom mentioned, but it’s very important, and wizards and autocomplete and whatnot allow for lazy code, verbose code that treats clarity with disdain.

Source code generation is a powerful feature. Tools like yacc are great, but such tools produce an opaque blob that also happens to be valid source code. Helpful wizards produce code that is supposed to be read and used like it was written for human consumption, however.

Go is great, I’m very happy to have found an employer that pays me to write Go code. Lots of people that do hip things like web programming, ruby, and python like it, but I like it because it keeps the simplicity of C and makes programming fun again. Sure, an idea is nice irrespective of the programming language, but Go allows me to implement the nice idea while enjoying the time I spend doing so.

Comment from Elwood Downey, WB0OEW

Time 8/8/2012 at 8:48 am

I agree also. IDEs are so full of magic it’s much harder to know what they want and what they are doing than using the command line. In addition to the ones you have mentioned, I also use one from Texas Instruments called Code Composer Studio for their family of DSP and motor controllers. It is a constant struggle. I finally found all their magic cross compiler commands and switches and built my own Makefiles and just edit the source with vim (thank goodness for vim on Windows). Now I’m much much more productive. The command line is dead — long live the command line!

Comment from Robert Nesius

Time 8/8/2012 at 7:40 pm

I’m curious to see how Go sucks less as a statically-typed language. I couldn’t help but think of D when he described Go’s features. I haven’t done anything with D but have studied it from afar. I LOL’d at his libboost example – I hope I never have to touch boost.

My big problem with IDEs is when they completely obfuscate the file structure and layout of a program. I like IDEs where a “project” is literally a directory and the structure of the project is mirrored in the filesystem.

I’m a huge vim fan myself, but for javascript, css, html… modern text editors sadly trounce vim. I recently invested a lot of effort to get vim on par with textmate for javascript and ruby – I couldn’t quite get all the way there, though I did make some big strides. Kind of a bummer as I think the limitation is VIM’s macro-language.

Comment from Twylo

Time 8/8/2012 at 11:14 pm

I just finished running SYSGEN on RT-11 5.3 on a real PDP-11/23+ sitting in my office — yes, for fun! — so I do have some appreciation for the simpler things in life. At work my primary development environment is emacs, as it has been for many years. Motion memory of the emacs keybindings makes me pretty productive in it, too!

But I say all that more or less just to get some bona fides out of the way, because recently my thoughts have been dwelling on this topic in a different direction. I think you and I agree on the premise that the tools that we have suck. They are shockingly bad. Eclipse, Visual Studio, name your IDE, they’re basically text editors with a bunch of ugly awkward tools bolted on that will help you type less if you know how to use them, but mostly they help you type less by shitting boilerplate code into your document (forgive my language). And how sad is this? My old friend emacs is 35 years old, it started life as a bunch of macros hacked onto TECO on a PDP-10. Now I have the processing power of dozens of PDP-10s _in my phone_, and our tools are only incrementally better (and in some ways, WORSE!). Douglas Engelbart’s dream of augmenting the human intellect hasn’t quite panned out, and yet if you go and look at his 1968 NLS demo, you wonder, why?? How could it not have? Look what they did with such primitive hardware!

I watched a video earlier this year that kind of gave me a kick in the rear, in a good way. I found it to be both inspiring and eye opening. Though a very significant part of it focuses on the problem of tools and how they can be so, so much better, it’s also about creativity, play, exploration and problem solving in general. I totally recommend it. It’s long, but it’s really worth it. It’s called “Inventing on Principle”, by Bret Victor.

It also inspired me to help fund the “Light Table” project on Kickstarter, which is developing a Clojure / JavaScript / Python IDE heavily influenced by Bret Victor’s ideas.

Man. I get really riled up by this topic now. It could be so much better than it is, but it’s not, and it’s so frustrating!

But for now, just for now… I’m still plugging away in emacs in a terminal.

Comment from Dan Lyke

Time 8/9/2012 at 2:04 pm

I’ve done extensive work in the Visual Studio IDE, and I think that there’s a bifurcation that’s happened in programming in the time I’ve been doing it. Visual Studio is a tool for people who are hooking together components that other people wrote. All of the wacky inconsistencies in the .NET API fall away when you have all of that autocomplete stuff, so you don’t have to remember what the methods on this object to extract data are, or why this one takes an array and a length and the other takes a length and an array.

It’s also very helpful that it’s hard to add third party stuff that isn’t written to “The Microsoft Way”. Remember, these are the same people who’ve been trying to tell us for a decade and a half now that they had to throw away OpenGL for DirectX because DirectX was faster, and for that same decade and a half, OpenGL on their platform has been faster (from the early software implementations of both back in ’95-96, to the recent Valve experiences in optimizing the Linux port and finding that when those changes were moved back over into Windows, OpenGL was once again faster). So it’s clear that Microsoft’s goal with Visual Studio is to lock people into the Microsoft way of thinking so that they’re not capable of generating code without the training wheels.

On the other side, we have the people using Makefiles, vim, emacs, and the like, and those people are into developing algorithms. I find that when I have to do algorithmic stuff (the last one of those I wrote was doing piping on vector shapes, expanding font characters in all directions and clipping off the resulting holes and loops and such) I quit Visual Studio and write in Emacs. Then I put the code back in VS and tie the UI together.

So, yeah: You’re not a dinosaur, you’re just in the class of people who are developing algorithms rather than plugging building blocks together.

Comment from Elwood Downey

Time 8/9/2012 at 8:36 pm

This thread is Brainwagon at its very best. Thank you all.

Comment from Steve VanDevender

Time 8/10/2012 at 5:50 pm

I think what makes C and UNIX continue to be attractive to skilled programmers is that they were built by skilled programmers for other skilled programmers. IDEs appear to be built by mediocre programmers for other mediocre programmers to make the things that mediocre programmers do easier, like creating large repetitive chunks of code, where skilled programmers would automate such things away or extend languages to make them more expressive.

Admittedly C and the UNIX development environment were made in the days when interactive terminals were new and exciting and computers were almost a million times slower than they are now, and that makes them look like dinosaur technology. Maybe we need the modern equivalents of Thompson and Ritchie and Kernighan to develop a new computing environment that takes advantage of fast graphics and fast multiple processors to show us how much more productive we could be if we were no longer bound by the successes of the past.

I can second the recommendation for the Bret Victor talk cited above, even though I think it’s just the merest hint of what might be possible if we could truly usefully integrate a GUI with traditional textual programming.

I got out of programming and into system administration long ago, but I also get that dinosaur feeling when I try to teach students how to use traditional command-line tools and get away from the GUIs that they install simply because they have little experience with using textual interfaces. Amazingly enough I have heard that even recent Windows server releases now install without a GUI. Apparently even Microsoft has realized what UNIX users have known for so long — that managing complex system configurations and large numbers of systems is far more efficient, flexible, and automatable with text-based configuration.

Comment from Aram Havarneanu

Time 8/11/2012 at 9:19 am

There’s a Server Core role in new versions of Windows server that doesn’t include the GUI shell and other stuff, but you don’t manage it Unix style. You are not supposed to log in to it in any way, locally or remotely; instead, you manage it via the (GUI) MMC console from a different computer.

All this stuff exposes a COM/.NET API so you can script it (in fact the MMC console is just a client for this API) but it’s a PITA compared to writing some shell scripts…

Comment from Mike K

Time 8/29/2012 at 4:34 pm

But if you’re a bird dinosaur you’ve got a good chance of surviving the modern era. 🙂

Comment from mostafa ramadan

Time 8/8/2012 at 5:31 am

Hi man. You are not a dinosaur. A vast number of programmers hate all forms of IDE. I’m 26 and I prefer vim/notepad++ instead of a full-blown IDE 🙂 From my observation a LOT of C programmers (especially on Unix/Linux) are like that.