A couple of years back, I made note of HAKMEM 175, a nifty hack by Bill Gosper that finds the next higher value that has the same number of ‘1’ bits as the input.

brainwagon » Blog Archive » HAKMEM 175.

If I bothered to convert it to C, I didn’t scribble it down, so I thought I’d do it here.

[sourcecode lang=”C”]

#include <stdio.h>

/* _ _ _ _ ____ __ ___ __ __ _ ____ ___

* | || | /_\ | |/ / \/ | __| \/ / |__ | __|

* | __ |/ _ \| ‘ <| |\/| | _|| |\/| | | / /|__ \

* |_||_/_/ \_\_|\_\_| |_|___|_| |_|_|/_/ |___/

*

* A straightforward implementation of Gosper’s algorithm for

* determining the next higher value which has the same number of 1

* bits. This is useful for enumerating subsets.

*

* Translated by Mark VandeWettering.

*/

unsigned int hakmem175(unsigned int A) { unsigned int B, C, D ;

B = A ; /* MOVE B, A */

C = -B ; /* MOVN C, B */

C &= B ; /* AND C, B */

A += C ; /* ADD A, C */

D = A ; /* MOVE D, A */

D ^= B ; /* XOR D, B */

D >>= 2 ; /* LSH D, -2 */

D /= C ; /* IDIVM D, C */

A |= D ; /* IOR A, C typo? */

return A ;

}

main()

{

unsigned int x ;

int i ;

x = 3 ;

do {

printf("0x%x\n", x) ;

x = hakmem175(x) ;

} while (x != 0) ;

}

[/sourcecode]

I was surprised to find that there is actually a bug in the published memo. The last instruction should obviously be an OR of A and D, not A and C as listed in the published memo. After discovering the error, I seeked to find mention of it onlne somewhere. This page lists the corrected PDP-10 assembly code without comment. It also suggests a couple of optimizations: you can save one register and one instruction by variable renaming, and with a bit of work, you can avoid the integer divide, which is probably a good thing on pretty much any architecture you are likely to use.

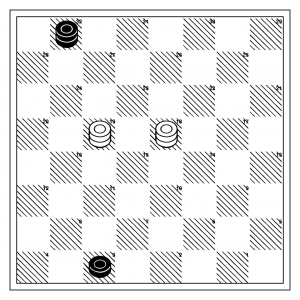

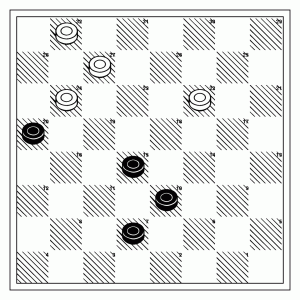

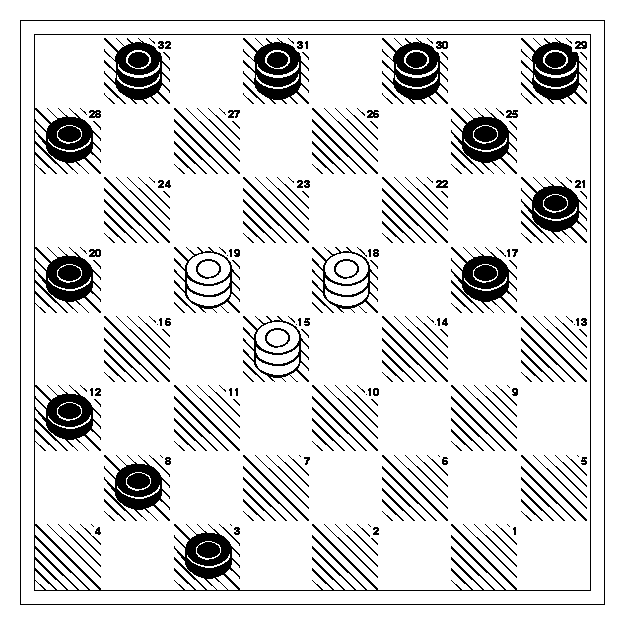

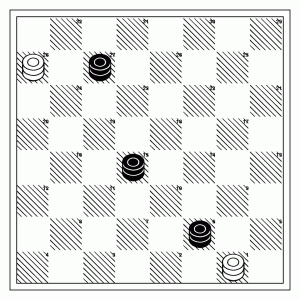

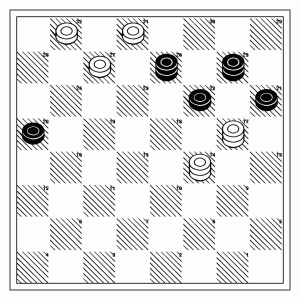

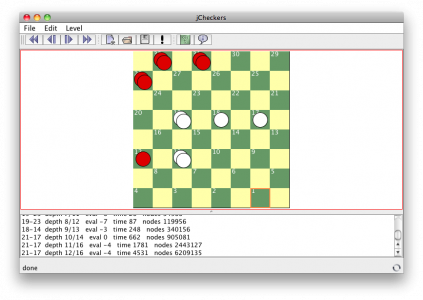

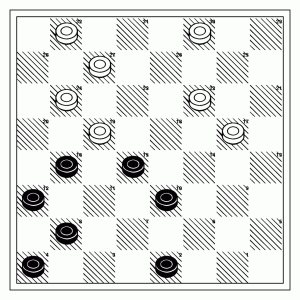

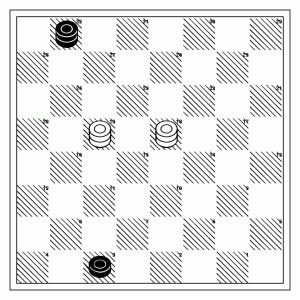

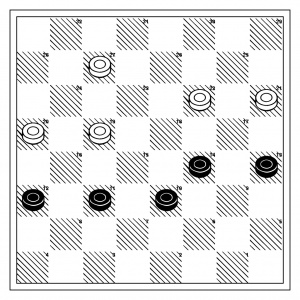

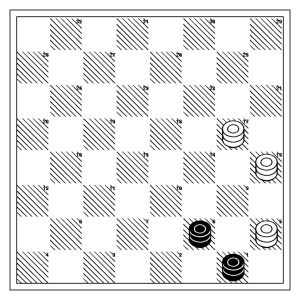

Addendum: I thought it might be fun to show an illustration of how this could be used. At one point, while writing my checkers program, I thought about enumerating all possible positions with given numbers of checkers on each side. Counting them is actually rather easy, but enumerating them is a bit trickier, but using the trick of HAKMEM175, it becomes somewhat easier.

[sourcecode lang=”C”]

#include <stdio.h>

#include <stdlib.h>

unsigned int

hakmem175 (unsigned int A)

{

unsigned int B, C, D;

B = A; /* MOVE B, A */

C = -B; /* MOVN C, B */

C &= B; /* AND C, B */

A += C; /* ADD A, C */

D = A; /* MOVE D, A */

D ^= B; /* XOR D, B */

D >>= 2; /* LSH D, -2 */

D /= C; /* IDIVM D, C */

A |= D; /* IOR A, C typo? */

return A;

}

/*

* Here’s an interesting use (okay, semi-interesting use) of the

* above function. Let’s use it to enumerate all the potential

* positions in checkers which have two checkers (not kings) for

* each side.

*/

#define WHITEILLEGAL (0x0000000F)

#define BLACKILLEGAL (0xF0000000)

int

main (int argc, char *argv[])

{

unsigned int B, W;

int nb = atoi (argv[1]);

int nw = atoi (argv[2]);

if (nb < 1 || nb > 12 || nw < 1 || nw > 12) {

fprintf (stderr, "usage: checkers nb nw\n");

fprintf (stderr, " 1 <= nb, nw <= 12\n");

exit (-1);

}

B = (1 << nb) – 1;

for (;;) {

W = (1 << nw) – 1;

for (;;) {

while (W != 0 && (W & (B | WHITEILLEGAL)))

W = hakmem175 (W);

if (W == 0)

break;

/* output B, W, we could be prettier */

printf ("%x %x\n", B, W);

W = hakmem175 (W);

}

B = hakmem175 (B);

while (B != 0 && (B & BLACKILLEGAL))

B = hakmem175 (B);

if (B == 0)

break;

}

return 0;

}

[/sourcecode]

Using this, you can easily enumerate the 125,664 positions with 2 checkers on each side, the 8,127,272 positions with 3 checkers on each side, and the 253,782,115 positions with 4 checkers on each side. By then, the code gets a little slow: it has to step over all the positions where there is a conflict in B and W placement, so it gets pretty slow. Still, it works reasonably well, and as such, might serve as part of the inner loop of a retrograde endgame analysis project.