Okay, it’s been a long time since I wrote anything here. Not a lot of drama going on in life; I’ve just been spending my free time writing for Quora rather than my own blog. But I’m still nerding out from time to time. Last night I dusted off an old project of mine and carried it a bit further…

A while back I wrote a short post about a simulator for Caxton Foster’s “Blue” computer architecture, described in his book Computer Architecture (I have the third edition, published in 1985). The “Blue” architecture is ridiculously simple: only sixteen instructions, 4096 words of memory, and only one addressing mode. Each 16-bit instruction word holds a 4-bit opcode and a 12-bit address, which is exactly enough to name all 4096 words. If you click back to my previous post, you’ll see a complete behavioral simulator for it, written in less than an hour.

Somehow this came back up, and I was suddenly intrigued by the idea of writing a simple program for it, just to test my ideas about how awful such a machine would be to program. Ultimately, my goal is a simple program that computes pi to around 100 decimal places. That seems doable, but it’s not a complete cakewalk, so as a first step I thought it might be fun to write a simple assembler.

So I shook the cobwebs off the bits of my brain that understood yacc/lex/bison/flex and wrote a simple assembler for the architecture. It totals only about 250 lines of code, but it handles symbolic labels and all the instructions. It took me about two hours of wall-clock time, with my attention split between programming and watching the latest episode of Grimm.

I doubt the assembler is of any real interest, but eventually I’ll get the code onto GitHub.

I then sat down this morning and wrote this simple program to take the signed value at the memory location VALUE and print it as a signed decimal number. It prints the sign, then five digits, with leading zeros if necessary. I didn’t bother to comment the code, but here it is…

[sourcecode lang="text"]
PRINTVALUE:
        LDA VALUE
        JMA NEG
        LDA PLUS
        OUT
POS:
        LDA S0
        STA TMP0
        SRJ DODIGIT
        LDA S1
        STA TMP0
        SRJ DODIGIT
        LDA S2
        STA TMP0
        SRJ DODIGIT
        LDA S3
        STA TMP0
        SRJ DODIGIT
        LDA S4
        STA TMP0
        SRJ DODIGIT
        HLT

DODIGIT:
        IOR JMPBIT
        STA RETURN
        LDA ZERO
        STA DIGIT
LOOP:   LDA VALUE
        ADD TMP0
        JMA DONE
        STA VALUE
        LDA DIGIT
        ADD ONE
        STA DIGIT
        JMP LOOP
DONE:
        LDA DIGIT
        ADD FE
        OUT
RETURN:
        JMP 0

NEG:
        LDA MINUS
        OUT
        LDA VALUE
        XOR ONES
        ADD ONE
        STA VALUE
        JMP POS

VALUE:  .WORD 12323
TMP0:   .WORD 0
DIGIT:  .WORD 0
S0:     .WORD -10000
S1:     .WORD -1000
S2:     .WORD -100
S3:     .WORD -10
S4:     .WORD -1
ZERO:   .WORD 0
ONE:    .WORD 1
ONES:   .WORD $FFFF
JMPBIT: .WORD $A000
MINUS:  .STRING "-"
PLUS:   .STRING "+"
FE:     .WORD 48
[/sourcecode]

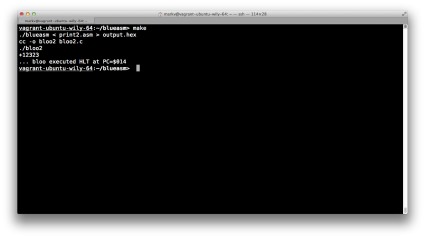

The assembler spits out a series of 16-bit words which can be loaded into my simulator…

[sourcecode lang="text"]
0x602B, 0x9024, 0x6038, 0xC000, 0x602E, 0x702C, 0x8014, 0x602F,
0x702C, 0x8014, 0x6030, 0x702C, 0x8014, 0x6031, 0x702C, 0x8014,
0x6032, 0x702C, 0x8014, 0x0000, 0x4036, 0x7023, 0x6033, 0x702D,
0x602B, 0x102C, 0x9020, 0x702B, 0x602D, 0x1034, 0x702D, 0xA018,
0x602D, 0x1039, 0xC000, 0xA000, 0x6037, 0xC000, 0x602B, 0x2035,
0x1034, 0x702B, 0xA004, 0x3023, 0x0000, 0x0000, 0xD8F0, 0xFC18,
0xFF9C, 0xFFF6, 0xFFFF, 0x0000, 0x0001, 0xFFFF, 0xA000, 0x002D,
0x002B, 0x0030,
[/sourcecode]

The data above simply gets compiled into my simulator, and it runs.

Okay, so what’s fun to note about this?

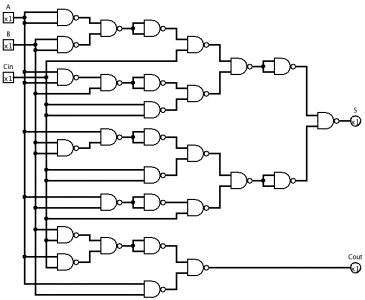

There is only a single register, the accumulator. You spend a LOT of time loading things, modifying the value, and storing them back into main memory. There is no “immediate mode”, so you have to create memory locations to hold common values like “1” and “0”. DODIGIT is a subroutine, called with the SRJ instruction. SRJ puts the PC (which already points at the instruction after the SRJ) into the accumulator, and then jumps to the specified address. To “return”, you OR in the bits that turn the accumulator into a JMP instruction (JMPBIT holds $A000, the JMP opcode), and then store that at the end of your subroutine. There is no call stack. Although this program doesn’t really show it, the processor does not implement indexing or indirection. There are also no condition flags such as CARRY or OVERFLOW, so implementing multi-precision arithmetic might be a challenge.

Foster’s book also describes additions to this architecture, which eventually grows into INDIGO, an architecture rather similar to a PDP-8.

Sure, if you really want to learn computer architecture, Patterson and Hennessy is probably a better path, but there is something pleasing about this archaic trip down memory lane. My pi-computing program will percolate in my brain for a bit, and will find implementation on some other evening.

Addendum: Hackaday recently posted about Al Williams’ FPGA implementation of an architecture based upon Foster’s Blue machine. I believe he made a number of changes that make it more practical, and it might be worth a look. He also wrote a monitor, which seems to use opcodes that aren’t compatible with mine.

Addendum2: After pondering it some more throughout the day, I think my idea of a pi-computing program will be difficult to implement on this virtual machine. All of the algorithms I can think of require 32-bit numbers, and the Blue architecture simply lacks the capabilities needed to implement them. It is also pretty weak on conditional branches: the only condition you can branch on is the sign bit of the accumulator.