This morning, I woke up and was trying to resolve a problem: why is Milhouse (my checkers program) so slow? The answer didn’t seem entirely obvious, so I pondered it some more. I refined it to “why is Milhouse so slow when it accesses the endgame database files?” I think I know why, but unfortunately, it will require some additional thought and work to remedy.

To explain, let me recap what happens. Put in simple terms, Milhouse does an alpha-beta search to some depth. As it is traversing in depth first order, it may realize that certain paths are futile (it can prove that no leaf in the subtree will be the right move regardless of what value it has, even without searching). Eventually, you’ll get down to a leaf node, and you’ll “evaluate” it (run a simple function which gives a figure of merit). These values get “backed up” through the tree and one of them ultimately wins as the value of the search.

In other words, every time you search, the score that you get is the evaluation of some node on the search frontier.

Let’s imagine for a moment that you have a simple evaluation function. Say you count men (unpromoted checkers) as 100 points and kings as 130 points, and the evaluation is the sum of all your scores minus the sum of your opponents scores. That’s certainly a reasonable, but relatively un-knowledgeable evaluation function, but it would “work”. The problem is this: it doesn’t differentiate very finely. Many, many positions on the search horizon will have identical scores. This significantly reduces the efficiency of search, because the “backed up values” can’t do their job efficiently: there are many subtrees with nodes on the search frontier that look just as good as the nodes you’ve already looked at. Hence, you end up searching and ultimately evaluating many more nodes than you could if you took more information into account.

Ultimately then, “knowledge is power”. Improving the evaluation function to include more information has an effect on overall search efficiency. The more knowledge it has in being able to differentiate between the merits of two positions, the fewer overall nodes it will have to evaluate in search (the fewer subtrees it will have to resolve all the way to the leaves).

My evaluation function doesn’t understand anything about runaway checkers or trapped kings, and therefore it has to use deeper searches to resolve situations that include these features. When I’ve played against Cake, I’ve noticed that not only does Cake search deeper faster, but Cake seems to recognize that it’s got me in trouble in searches of much shallower depth (sometimes as many as five moves earlier).

So, I’ve got to help Milhouse learn more about checkers. I can do this two ways: one, by learning more about checkers myself, and putting some of that knowledge into Milhouse. The second, by learning more about machine learning, and then allowing Milhouse to learn more itself, either through self-play or training.

I suspect that machine learning might be more productive, but I’m not entirely convinced. I’ll have to give it a bit more thought.

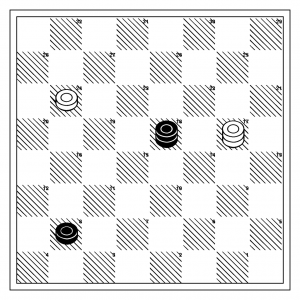

Addendum: Okay, that was highly abstract, let’s work through an example. I’ve been trying to absorb some knowledge from Checkers legend Derek Oldbury, whose Move Over is available online. Here’s Diagram 17, a fairly simple position:

If it were Black’s turn to move, it would be a draw (both men promote, and a boring 2×2 King draw ensues) but if White is to move, clearly, he can’t move his king (backing away pins him against the wall, advancing is suicide), so he must move his man. Putting him against the wall is hopeless, the black man takes the opposing position and waits to jump him. Therefore, the line goes 24-19 8-11 19-15 18-22 15×8 22×13. There is an exchange of material, but while white will king his man, he’s still doomed, as he can’t escape to the safety of the double corner. Here’s how the search looks using milhouse without its endgame database:

milhouse: restore diagram.17

board restore from diagram.17

White

+--------+

| - - - -|

|- - - - |

| w - - -|

|- - R W |

| - - - -|

|- - - - |

| r - - -|

|- - - - |

+--------+

Red

... White to move

... FEN: W:WK17,24:B8,K18

milhouse: id

+1 : [-11] 0.00s

: 24-19

+3 : [-15] 0.00s

: 24-19 8-12 17-13

+5 : [-12] 0.00s

: 24-19 8-11 19-15 11-16 15-10

+7 : [-19] 0.00s

: 24-19 18-23 19-16 23-18 16-12 8-11 12-8

+9 : [-16] 0.01s

: 24-19 8-12 17-21 18-22 19-15 12-16 15-10 16-19 10-6

+11 : [-12] 0.02s

: 24-19 8-11 19-15 18-22 15x8 22x13 8-3 13-9 3-8

: 9-14 8-11

+13 : [-14] 0.03s

: 24-19 8-11 19-15 18-22 15x8 22x13 8-3 13-9 3-8

: 9-14 8-11 14-18 11-16

+15 : [-17] 0.10s

: 24-19 8-11 19-15 18-22 15x8 22x13 8-3 13-9 3-8

: 9-14 8-11 14-18 11-16 18-15 16-12

... researching after failing low (-42)

+17 : [-9983] 0.30s

: 24-19 8-11 19-15 18-22 15x8 22x13 8-3 13-9 3-8

: 9-6 8-11 6-10 11-16 10-15 16-12 15-11 12-16

final score = -9983

0.327 seconds elapsed.

... 231735 nodes searched, 65777 nodes evaluated

... 30493 alpha nodes, 5483 pv nodes, 65965 beta cutoffs

... avg branching factor 1.12

... 11096 nodes ordered

... 19060 pv hits, 16916 pv misses (52.98%)

transposition tables

... 166190 gets (85501 tier0 / 1072 tier1 / 79617 failed)

... 101941 puts (85701 tier0 / 16240 tier1) (30493 alpha, 5483, 65965 beta)

... 0 chinook database accesses.

The output bears some explanation. it uses iterative deepening to search, adding two ply to each search as it deepens. So, it starts out with one ply depth, then three, then five, and so on, up until it discovers a winning line with a 17 ply search (it’s actually a 19 ply winning line, quiescence search makes it resolve the final jump that wins the game). You’ll notice that at each search depth, it believes 24-19 to be the best move (it is) but that right up until the realization that the game is lost, (a 15 ply search) it believed the game to be essentially even. Had I encountered the starting position at the leaf node of a search, it might have believed it could salvage a draw from this positon, and selected it over a position which actually provided a draw.

Now, let’s look at the same search, but using the endgame database.

board restore from diagram.17

White

+--------+

| - - - -|

|- - - - |

| w - - -|

|- - R W |

| - - - -|

|- - - - |

| r - - -|

|- - - - |

+--------+

Red

... White to move

... FEN: W:WK17,24:B8,K18

(DB W LOSES) milhouse: depth 99

search depth is 99

(DB W LOSES) milhouse: id

+1 : [-7614] 0.00s

: 17-13

+3 : [-7614] 0.00s

: 17-13 18-14 24-19

... researching after failing low (-7712)

+5 : [-7712] 0.01s

: 17-13 18-14 13-9 14x5 24-19

... researching after failing low (-7808)

+7 : [-7808] 0.01s

: 24-19 8-11 19-15 18-22 17x26 11x18 26-30

+9 : [-7810] 0.01s

: 24-19 8-11 19-15 18-22 15x8 22x13 8-3 13-9 3-7

+11 : [-7812] 0.02s

: 24-19 8-11 19-15 18-22 15x8 22x13 8-3 13-9 3-7

: 9-14 7-2

+13 : [-7812] 0.02s

: 24-19 8-11 19-15 18-22 15x8 22x13 8-3 13-9 3-7

: 9-14 7-3 14-10 3-8

+15 : [-7812] 0.03s

: 24-19 8-11 19-15 18-22 15x8 22x13 8-3 13-9 3-7

: 9-14 7-3 14-17 3-7 17-14 7-3

... researching after failing low (-9983)

+17 : [-9983] 0.04s

: 24-19 8-11 19-15 18-22 15x8 22x13 8-3 13-9 3-7

: 9-14 7-3 14-10 3-8 10-15 8-12 15-11 12-16

final score = -9983

0.075 seconds elapsed.

... 27737 nodes searched, 4147 nodes evaluated

... 4573 alpha nodes, 124 pv nodes, 9520 beta cutoffs

... avg branching factor 1.09

... 55 nodes ordered

... 3388 pv hits, 1309 pv misses (72.13%)

transposition tables

... 23599 gets (9081 tier0 / 15 tier1 / 14503 failed)

... 14217 puts (14125 tier0 / 92 tier1) (4573 alpha, 124, 9520 beta)

... 3852 chinook database accesses.

(DB W LOSES) milhouse:

In spite of the fact that it loaded 3852 positions from the database cache, it actually ran 7x faster. You can see that it searched and evaluated many fewer nodes. The highly negative scores even at the initial search depth of one means that it knows this position is lost immediately, so it effectively gives you the benefit of an additional 17 ply of search, should this position show up as a leaf in the search tree. What is needed is to create an evaluation function which more closely predicts the value of deeper searches without the expense.

Addendum2:

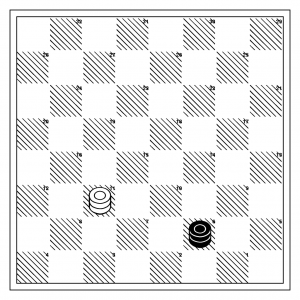

Here’s an example position that causes difficulty without the endgame database:

Without the endgame databse, milhouse scores this position as +6 for red, but of course, it’s actually won (it’ll take about 7 more ply of search depth to discover). If you look at how it scores the possible moves, you get:

analyzing board position... -6 : 6-1 11-15 ... -4 : 6-2 11-15 ... +5 : 6-9 11-15 ... +12 : 6-10 11-8 ...

You’ll see it selects the right move, but the reality is that the first three moves are all draws, where the last move allows the win.