How much time is spent refreshing my 8 digit LED display?

A couple of days ago, I wrote about experimenting with a little Chinese 8 digit, 7 segment LED module that is driven by some 74HC595 shift registers. My initial experiment in driving it with the Teensy was successful: I got a nice, steady display with a simple interrupt driven scheme which displays each digit for two milliseconds before moving on to the next. But I wondered what percentage of my total time was actually being spent refreshing this display. In particular, how fast was the interrupt routine?

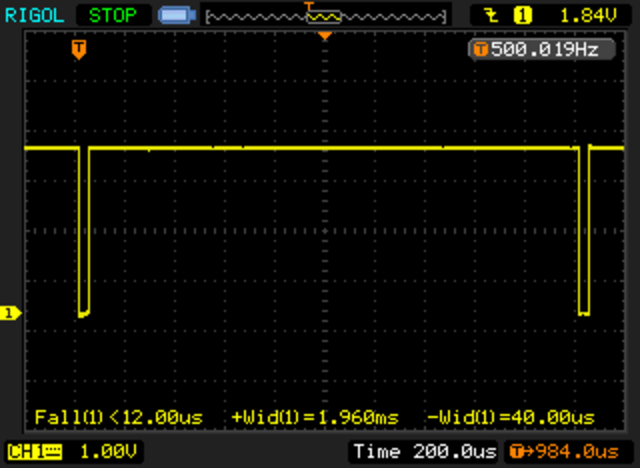

Most of the time spent in the interrupt routine is presumably spent serially clocking out the individual bits to the shift registers, using the Arduino library function shiftOut. I thought about adding some timers and computing the elapsed time, but this morning I realized that I could use my nifty Rigol scope to figure out how long it took by looking at the LATCH signal. Before the data is clocked out, the LATCH signal is brought low, and afterwards it is brought high again, so the width of the low pulse is roughly the time spent shifting. There are a couple of instructions on either side, but it's not a bad approximation.
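To make the measurement idea concrete, here is a rough host-side model in plain C++ (not the actual sketch running on the Teensy). It records every simulated pin write so you can see what falls inside the LATCH-low window. The pin numbers, the two-byte layout (one byte for digit select, one for segments) and all of the function names are assumptions made for illustration; the inner loop is modeled on the MSB-first bit-banging that Arduino's shiftOut performs.

```cpp
// Host-side model of a refresh step that brackets two shifts with LATCH.
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical pin numbers, chosen only for illustration.
constexpr int DATA_PIN = 2, CLOCK_PIN = 3, LATCH_PIN = 4;
constexpr int LOW_LEVEL = 0, HIGH_LEVEL = 1;

struct PinEvent { int pin; int level; };
std::vector<PinEvent> trace;  // every simulated pin write, in order

// Stand-in for digitalWrite(): it only records the write.
void digitalWriteModel(int pin, int level) { trace.push_back({pin, level}); }

// MSB-first bit-banged shift: set data, then pulse the clock, per bit.
void shiftOutModel(int dataPin, int clockPin, std::uint8_t value) {
    for (int i = 7; i >= 0; --i) {
        digitalWriteModel(dataPin, (value >> i) & 1);
        digitalWriteModel(clockPin, HIGH_LEVEL);
        digitalWriteModel(clockPin, LOW_LEVEL);
    }
}

// One refresh step: LATCH low, shift 16 bits, LATCH high.
// The byte order (digit select first, then segments) is an assumption.
void refreshDigit(std::uint8_t digitSelect, std::uint8_t segments) {
    digitalWriteModel(LATCH_PIN, LOW_LEVEL);
    shiftOutModel(DATA_PIN, CLOCK_PIN, digitSelect);
    shiftOutModel(DATA_PIN, CLOCK_PIN, segments);
    digitalWriteModel(LATCH_PIN, HIGH_LEVEL);
}

// Pin writes that happen while LATCH is low, i.e. the work covered by the
// low pulse on the scope. Expects trace to hold exactly one refresh step.
std::size_t writesWhileLatchLow() {
    assert(trace.size() >= 2);
    assert(trace.front().pin == LATCH_PIN && trace.front().level == LOW_LEVEL);
    assert(trace.back().pin == LATCH_PIN && trace.back().level == HIGH_LEVEL);
    return trace.size() - 2;  // everything except the two LATCH writes
}
```

In this model, one call to refreshDigit produces 50 pin writes, 48 of them between the two LATCH edges, which is why the low pulse is a reasonable stand-in for the cost of the whole routine.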

When I got home, I hooked up the scope.

Nifty! The total time accounts for 40 microseconds out of every 2000 microseconds, or about 2% of the total runtime for the Teensy. Nice! Works great. My guess is that it would be significantly more as a percentage with a traditional Uno. Perhaps I’ll try that out soon.
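The percentage is just the interrupt time divided by the interrupt period. A tiny helper (the function name is mine, not from any library) makes it easy to plug in other measurements:

```cpp
#include <cassert>

// Percentage of CPU time consumed by an interrupt that takes isrMicros
// to run and fires once every periodMicros.
double cpuLoadPercent(double isrMicros, double periodMicros) {
    assert(periodMicros > 0.0);
    return 100.0 * isrMicros / periodMicros;
}
```

With the scope reading above, cpuLoadPercent(40.0, 2000.0) comes out to 2.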

Addendum: I installed the same code on the SparkFun RedBoard (an Arduino Uno clone, built around an ATmega328 running at 16 MHz) and measured it with the same setup. The interrupt routine runs in about 360 µs, about 9x slower, consuming about 18% of the available CPU cycles. Doubling the per-digit period to 4 ms would halve this to about 9%, but with eight digits that drops the full-display refresh to about 31 Hz, and I find the strobing at that rate to be slightly too much for me.
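The catch with stretching the period is that the whole display is only redrawn once every eight interrupts. A small sketch of that relationship, assuming each digit is lit for one full period in turn (the function name is hypothetical):

```cpp
#include <cassert>

// Full-display refresh rate, in Hz, when each of `digits` digits is lit
// for perDigitMicros microseconds in turn.
double fullRefreshHz(double perDigitMicros, int digits) {
    assert(perDigitMicros > 0.0 && digits > 0);
    return 1e6 / (perDigitMicros * digits);
}
```

For eight digits, 2 ms per digit gives 62.5 Hz, while 4 ms per digit gives 31.25 Hz, which is down in the range where flicker gets noticeable.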
