Monkeying around with the Lytro Camera…

A couple of people on my Twitter feed yesterday (aside: I tweet as @brainwagon, and passed 5000 tweets yesterday) had questions about how this light field camera works, how fast the sensor is, how long it takes to acquire an image, etc. While this is the first Lytro camera I’ve ever had the time to tinker with, I did spend a couple of years doing R&D on computational photography in general and light field photography in particular, so I am pretty familiar with how these things work. Combined with information like the Lytro Meltdown and the lfp splitter, that was enough to let me tear apart the files for the example “monkey” picture I took yesterday. For completeness:

So, how does the Lytro take pictures that can do this?

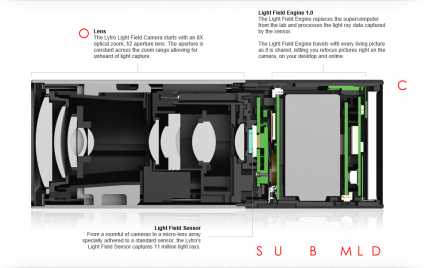

First, let’s take a look at the cross section of the camera, thoughtfully provided by Lytro on the web:

Despite its kind of primitive outer appearance, inside it’s remarkably complex. As someone who played a small part in the optical design of a similar camera, I can tell you that getting this right can be remarkably tricky. But you might look at it and say: “Gosh, it’s a telephoto lens, big whoop! Where is the ‘secret sauce’?”

It’s in the area labelled light field sensor. Instead of having an ordinary CCD which simply samples the illumination on the focus plane at a bunch of individual locations, the light field camera has a micro lens array: an array of tiny lenses which allow the camera to measure not only the total illumination arriving at a location, but also its distribution: what proportion of that light is arriving from each direction. It’s this property that will eventually allow the computational magic that makes refocusing possible.
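To make the “computational magic” a little more concrete, here is a minimal sketch of the textbook shift-and-add approach to refocusing. This is not Lytro’s actual pipeline. It assumes the raw lenslet image has already been resampled into a 4D array of views, `light_field[u, v, y, x]`, one small image per direction; the function name `refocus` and the `shift` parameter are my own.

```python
import numpy as np

def refocus(light_field, shift):
    """Shift-and-add refocus of a 4D light field indexed [u, v, y, x].

    `shift` is the translation, in pixels, applied per unit of angular
    offset from the central view. Changing it moves the plane of focus.
    Shifts are rounded to whole pixels and wrap around at the edges,
    which is good enough for a sketch.
    """
    n_u, n_v, height, width = light_field.shape
    center_u = (n_u - 1) / 2.0
    center_v = (n_v - 1) / 2.0
    out = np.zeros((height, width), dtype=np.float64)
    for u in range(n_u):
        for v in range(n_v):
            dy = int(round(shift * (u - center_u)))
            dx = int(round(shift * (v - center_v)))
            # Translate this view, then accumulate it into the average.
            out += np.roll(light_field[u, v], (dy, dx), axis=(0, 1))
    return out / (n_u * n_v)
```

Objects whose view-to-view displacement is cancelled by the chosen `shift` line up and come out sharp; everything else gets smeared across the average, which is what we see as defocus blur.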

You probably aren’t able to visualize that very well (I certainly couldn’t when I began), but here’s an example which may (but probably won’t) help a bit. Even if you don’t completely get it, it’s kind of cool.

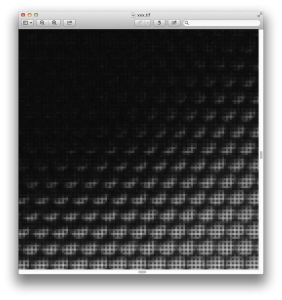

Using the lfpsplitter tools above, I extracted the “raw” pixel data from the monkey snapshot I took. If you are familiar with the way most cameras work, you might know that inside digital cameras is a sensor which can be thought of as an array of pixels. Some are sensitive to red, some green, some blue, usually arranged in a grid that is called a Bayer filter or a Bayer mask. Software in your camera is responsible for looking at each individual R, G, and B pixel and combining them to produce RGB pixels at a resolution lower (usually by half) than the native resolution of the sensor. The image below is a similar “raw” image of the sensor data coming from the Lytro. It is represented as monochrome values, each of which is 16 bits. It looks dark because none of the processing for the Bayer filtering, exposure, color balance etc. has been done. The original images are 3280×3280, which I’ve shrunk down to fit on this page.
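If you want to poke at this kind of data yourself, here is a rough sketch of reading it and doing the crudest possible Bayer processing. It assumes the extracted file is a flat dump of 3280×3280 unsigned 16-bit samples; the little-endian byte order and the RGGB layout of each 2×2 cell are guesses on my part, so check them against your own file. Both function names are made up for this example.

```python
import numpy as np

def load_raw16(path, width=3280, height=3280):
    """Read width*height unsigned 16-bit samples into a 2D array.

    Assumes little-endian samples with no header (an assumption).
    """
    data = np.fromfile(path, dtype="<u2", count=width * height)
    if data.size != width * height:
        raise ValueError("file is too short for a %dx%d image" % (width, height))
    return data.reshape(height, width)

def bin_bayer_rggb(raw):
    """Collapse each 2x2 Bayer cell into one RGB pixel (half resolution).

    Assumes an RGGB cell layout (an assumption) and even image
    dimensions. The two green samples in each cell are averaged.
    """
    raw = raw.astype(np.float64)
    red = raw[0::2, 0::2]
    green = (raw[0::2, 1::2] + raw[1::2, 0::2]) / 2.0
    blue = raw[1::2, 1::2]
    return np.stack([red, green, blue], axis=-1)
```

This is the “half resolution” trade described above: a 3280×3280 mosaic becomes a 1640×1640 RGB image. Real cameras interpolate instead of binning, but binning is enough to check that you’ve guessed the layout correctly.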

You can probably see the monkey, but might ask, “again, what’s the deal? Seems just like a dark, bad image of the monkey?” Let’s zoom in.

And further?

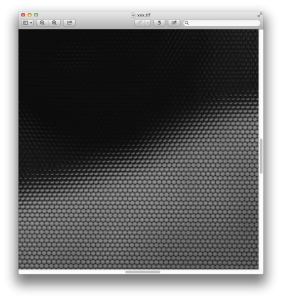

And finally down at the bottom, looking at individual pixels:

The large image is actually made up of little tiny circular images, packed in a hexagonal array. Each pixel is about 1.4 microns across. The circular images of each lenslet are about 13.89 microns across. The rectilinear “gridding” artifact you see is from the Bayer mask.

Pretty nifty.
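Those numbers are enough for some back-of-the-envelope arithmetic about how many lenslets there are. The sketch below assumes the circular images touch (so the lenslet spacing equals the 13.89 micron diameter), that the hexagonal packing is ideal, and that the array is perfectly aligned with the sensor. None of that is exactly true of a real camera, so treat the results as estimates.

```python
import math

PIXEL_PITCH_UM = 1.4      # pixel size quoted above
LENSLET_PITCH_UM = 13.89  # lenslet image diameter quoted above
SENSOR_PIXELS = 3280      # raw image is 3280 x 3280

def lenslet_geometry(pixel_um=PIXEL_PITCH_UM,
                     lenslet_um=LENSLET_PITCH_UM,
                     sensor_px=SENSOR_PIXELS):
    """Estimate the lenslet layout for an ideal hexagonal packing."""
    pitch_px = lenslet_um / pixel_um
    # In a hex grid, adjacent rows sit sqrt(3)/2 of a pitch apart.
    row_spacing_px = pitch_px * math.sqrt(3) / 2.0
    per_row = int(sensor_px // pitch_px)
    rows = int(sensor_px // row_spacing_px)
    return {
        "pitch_px": pitch_px,
        "row_spacing_px": row_spacing_px,
        "lenslets_per_row": per_row,
        "rows": rows,
        "total_lenslets": per_row * rows,
    }

if __name__ == "__main__":
    for name, value in lenslet_geometry().items():
        print(name, value)
```

Each lenslet image works out to a bit under 10 pixels across, with about 330 lenslets per row. That is the trade a light field camera makes: roughly ten million sensor pixels buy you something on the order of a hundred thousand spatial samples, each with its own little map of directions.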

The software that gets you from this raw image to the final image is actually nontrivial, in no small part because the calibration is so difficult. But it’s awesome that I have a little gadget that can acquire these raw light fields (our prototypes were far bulkier).

Last night, I spent some time trying to understand the Wi-Fi protocol, and wrote some code that successfully received the callback messages from the camera, but I had more difficulty understanding the command messages and getting them to work. The idea is to create a set of Python programs that will allow me to pull this kind of raw data from the camera, without needing to go through the Mac OS/Windows Lytro Desktop software. If anyone has done this, I’d love to compare notes. Stay tuned.
