Eldon, WA0UWH, was inspired by my recent experiment with Tetsuo Kogawa’s micro transmitter, and decided to build his own. Unlike my rather crude (but surprisingly effective) lashup on some copper clad board, Eldon designed a tiny 0.5″x0.8″ board, etched it, and used surface mount components to finish it. Very nifty, and totally dwarfed by the 9V battery that powers it. Check it out!

Monthly Archives: April 2011

Minor outages might occur…

I’m doing a bit of website management. This might result in some minor unavailability of my website: hang in there, I’ll be back.

Update: I appear to be up and running again on a new server. Go ahead and send me an email if you notice any trouble.

Book Review: Land of LISP, by Conrad Barski

I learned to program as a teenager back in the 1980s, starting as most of a generation of future computer professionals did. I had an Atari 400 at home, and learned to program using the most popular language of the day: BASIC. There were lots of magazines like COMPUTE! and Creative Computing that included program listings for simple games that you could type in and experiment with. The interaction was very hands on and immediate.

Sometimes I feel like we’ve lost this kind of “hobbyist programming”. Because programming can be lucrative, we often concentrate on the saleability of our skills, rather than the fun of them. And while the computers are more powerful, they also are more complicated. Sometimes it feels like we’ve lost ground: that to actually be a hobbyist requires that you understand too much, and work too hard.

That’s a weird way to introduce a book review, but it’s that back story that compelled me to buy Conrad Barski’s Land of Lisp. The book is subtitled Learn to Program in LISP, One Game at a Time!, and it’s delightful. The book is chock-full of comics and cartoons, humorously illustrated and fun. But beneath the fun exterior, Barski is an avid LISP enthusiast with a mission: to convince you that using LISP will change the way you program and even the way you think about programming.

I’ve heard this kind of fanatical enthusiasm before. It’s not hard to find evangelists for nearly every programming language, but in my experience, most of the rarer or more esoteric languages simply don’t seem able to convince you to go through the hassle of learning them. For instance, I found that none of the “real world” examples of Haskell programs in Real World Haskell were the kind of programs that I would write, or else they were programs that I already knew how to write in other languages, where Haskell’s power was simply not evident. After all, you can write FORTRAN programs in nearly any language you like.

But I think that Land of Lisp succeeds where other books of this sort fail. It serves as an introductory tutorial to the Common LISP language by creating a series of retro-sounding games like the text adventure game “Wizard’s Adventure” and a web based game called “Dice of Doom”. But these games are actually not just warmed over rehashes of the kind of games that we experimented with 30 years ago (and grew bored of 29 years ago): they demonstrate interesting and powerful techniques that pull them significantly above those primitive games. You’ll learn about macros and higher-order programming. You’ll make a simple webserver. You’ll learn about domain-specific languages, and how they can be used to generate and parse XML and SVG.

In short, you’ll do some of the things that programmers of this decade want to do.

I find the book to be humorous and fun, but it doesn’t pander or treat you like an idiot. While it isn’t as strong academically as Structure and Interpretation of Computer Programs (which is also excellent, read it!) it is a lot more fun, and at least touches on many of the same topics. I am not a huge fan of Common LISP (I prefer Scheme, which I find to be easier to understand), but Common LISP is a reasonable language, and does have good implementations for a wide variety of platforms. Much of what you learn about Common LISP can be transferred to other LISP variants like Scheme or Clojure.

But really what I like about this book is just the sense of fun that it brings back to programming. Programming after all should be fun. The examples are whimsical but not trivial, and can teach you interesting things about programming.

At the risk of scaring you off, I’ll provide the following link to their music video, which gives you a hint of what you are getting yourself into if you buy this book:

I’d rate this book 4/5. Worth having on your shelf!

Land of LISP, by Conrad Barski

Addendum: Reader “Angry Bob” has suggested that the language itself should be spelled not as an acronym, but as a word, “Lisp”. It certainly seems rather common to do so, but is it wrong to spell it all in caps? LISP actually is derived from LISt Processor (or alternatively Lots of Insufferable Superfluous Parentheses), just as FORTRAN is derived from FORmula TRANslator, so capitalizing it does seem reasonable to me. Wikipedia lists both. I suppose I should bow to convention and use the more common spelling “Lisp”, if for no other reason than that Guy Steele’s Common Lisp the Language (available at the link in HTML and PDF format, a useful reference) spells it that way. Or maybe I’ll just continue my backward ways…

The Micro FM transmitter on copper clad (much better!)

Yesterday’s video showed a very fussy version of Tetsuo Kogawa’s one-transistor FM transmitter, which worked after a fashion, but which seemed really squirrelly. Almost any motion of anything caused the circuit to behave rather badly as capacitance changed, and I picked up a considerable amount of hum. Today, I rebuilt the circuit onto a piece of one-sided copper clad PCB material, and it worked much better: hardly any hum, and much less finicky. I didn’t even try to add a clip lead: what you see below is the circuit operating with whatever signal radiates from the PCB.

I’m still getting multiple copies of the output across the FM broadcast dial, so I am not sure that it’s really that great of a circuit, and I’d be terrified of trying to amplify this and send it over a greater distance lest the FCC come hunting me down, but it at least works, and wasn’t very hard to debug, once I got the difference in pin layout for the 2N3904 sorted out and redid the layout a bit.

Check it out!

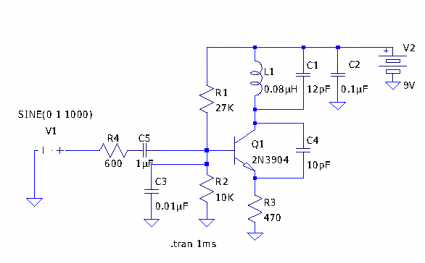

Schematic for the Micro FM transmitter

Tetsuo Kogawa’s circuit is pretty well documented, but not in conventional schematic form. I decided to enter it into LTSpice to see what it could make of it, and decided to go ahead and put the schematic online here, with perhaps a few comments:

I’ve set this up more or less as I built the circuit: in my circuit C1 is a small air variable cap that goes up to about 18pF, so I’ve set it to 12pF here. I use a 9V supply, so that’s what I put in for V2. I changed the power supply bypass cap to be 0.1uF instead of 0.01uF, since I have a bag of 0.1uF ones, and it doesn’t seem to affect the circuit. The L1 value of 0.08uH was determined by plugging numbers into the formula for an air wound solenoid coil: it’s probably only very roughly what the inductance actually is. Instead of the 2SC2001, I went ahead and put in the 2N3904 that I used. V1 is supposed to model the audio input, supplying a 1000 Hz, 1V amplitude sine wave to the modulation input. The output should be tapped from the emitter of Q1.
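For a rough sanity check on those values, here’s a small sketch using Wheeler’s approximation for a single-layer air-core coil, L(µH) = d²n² / (18d + 40l) with diameter d and length l in inches, plus the textbook LC resonance formula f = 1/(2π√(LC)). The coil dimensions in the example call are hypothetical values, not measurements, and the function names are made up for illustration. The resonance figure ignores the transistor’s capacitance and every other capacitor in the circuit, so treat it as a ballpark, not a prediction of where the carrier lands. POSIX sh has no floating point, so the arithmetic is done in awk.

[sourcecode lang="sh"]
#!/bin/sh
# Hypothetical helpers for sanity-checking the tank values.

# Wheeler's approximation for a single-layer air-core solenoid:
#   L(uH) = d^2 * n^2 / (18*d + 40*len), with d and len in inches.
wheeler_uh() {  # args: diameter_in turns length_in
    awk -v d="$1" -v n="$2" -v l="$3" \
        'BEGIN { printf "%.3f\n", (d * d * n * n) / (18 * d + 40 * l) }'
}

# Naive LC resonance f = 1 / (2*pi*sqrt(L*C)), printed in MHz.
# Ignores transistor, stray, and all other circuit capacitance.
resonance_mhz() {  # args: L_uH C_pF
    awk -v l="$1" -v c="$2" 'BEGIN {
        pi = atan2(0, -1)
        printf "%.1f\n", 1 / (2 * pi * sqrt(l * 1e-6 * c * 1e-12)) / 1e6
    }'
}

wheeler_uh 0.25 5 0.3   # hypothetical coil: 1/4" diameter, 5 turns, 0.3" long
resonance_mhz 0.08 12   # the L1 and C1 values from the text above
[/sourcecode]

With 0.08uH and 12pF the naive estimate comes out around 162 MHz, well above the FM broadcast band; presumably the extra capacitance from the transistor, the layout, and the rest of the circuit pulls the real oscillator lower, which is one more reason to treat the coil value as only a rough guide.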

I’m going to experiment with the circuit a bit more: I’m particularly interested in jigging this up so I can figure out what the deviation is likely to be, how it can be controlled, and how the biasing might change with a 6V supply.

Watermarking and titling with ffmpeg and other open source tools…

I’ve received two requests for information about my “video production pipeline”, such as it is. As you can tell by my videos, I am shooting with pretty ugly hardware, in a pretty ugly way, with minimal (read “no”) editing. But I did figure out a pretty nice way to add some watermarks and overlays to my videos using open source tools like ffmpeg, and thought that it might be worth documenting here (at least so I don’t forget how to do it myself).

First of all, I’m using an old Kodak Zi6 that I got for a good price off woot.com. It shoots at 1280x720p, which is nominally a widescreen HD format. But since ultimately I am going to target YouTube, and because the video quality isn’t all that astounding anyway, I have chosen in all my most recent videos to target a 480 line format, which, assuming a 16:9 aspect ratio, means that I need to resize my videos down to 854×480. The Zi6 saves in a Quicktime container format, using h264 video and AAC audio at 8Mbps and 128kbps respectively.
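The 854 figure is just 480 × 16/9 ≈ 853.3 rounded up to a whole, even number of pixels; H.264 encoders working with 4:2:0 chroma generally want even dimensions. A tiny sketch of that arithmetic using plain shell integer math (the function name is hypothetical):

[sourcecode lang="sh"]
#!/bin/sh
# Width for a given height at 16:9, rounded up to an even number of pixels.
width_for_height() {  # arg: height in pixels
    w=$(( ($1 * 16 + 8) / 9 ))    # ceil(height * 16 / 9) using integer math
    echo $(( (w + 1) / 2 * 2 ))   # bump odd widths up to the next even value
}

width_for_height 480   # 854
width_for_height 720   # 1280
[/sourcecode]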

For general mucking around with video, I like to use my favorite Swiss army knife: ffmpeg. It reads and writes a ton of formats, and has a nifty set of features that help in titling. You can try installing it from whatever binary repository you like, but I usually find out that I need to rebuild it to include some option that the makers of the binary repository didn’t think to add. Luckily, it’s not really that hard to build: you can follow the instructions to get a current source tree, and then it’s simply a matter of building it with the needed options. If you run ffmpeg by itself, it will tell you which configuration options were used to compile it. For my own compile, I used these options:

--enable-libvpx --enable-gpl --enable-nonfree --enable-libx264 --enable-libtheora --enable-libvorbis --enable-libfaac --enable-libfreetype --enable-frei0r --enable-libmp3lame

I enabled libvpx, libvorbis and libtheora for experimenting with webm and related codecs. I added libx264 and libfaac so I could do MPEG4, mp3lame so I could encode to mp3 format audio, and, most important for this example, libfreetype so it would build the video filters that can overlay text onto my video. If you compile ffmpeg with these options, you should be compatible with what I am doing here.

It wouldn’t be hard to just type a quick command line to resize and re-encode the video, but I’m after something a bit more complicated here. My pipeline resizes, removes noise, does a fade in, and then adds text over the bottom of the screen that contains the video title, my contact information, and a Creative Commons banner so that people know how they can reuse the video. To do this, I need to make use of the libavfilter features of ffmpeg. Without further ado, here’s the command line I used for a recent video:

[sourcecode lang="sh"]
#!/bin/sh
# Resize, fade in, denoise, add a translucent title bar with text, and overlay the CC badge.
./ffmpeg -y -i ../Zi6_4370.MOV -sameq -aspect 16:9 -vf "scale=854:480, fade=in:0:30, hqdn3d [xxx]; color=0x33669955:854x64 [yyy] ; [xxx] [yyy] overlay=0:416, drawtext=textfile=hdr:fontfile=/usr/share/fonts/truetype/ttf-dejavu/DejaVuSerif-Bold.ttf:x=10:y=432:fontsize=16:fontcolor=white [xxx] ; movie=88x31.png [yyy]; [xxx] [yyy] overlay=W-w-10:H-h-16" microfm.mp4
[/sourcecode]

So, what does all this do? Well, walking through the command: -y says to go ahead and overwrite the output file. -i specifies the input file, which is the raw footage that I transferred from my Zi6. I specified -sameq to keep the quality level the same for the output: you might want to specify an audio and video bitrate separately here, but I figure retaining the original quality for upload to YouTube is a good thing. I am shooting 16:9, so I specify the aspect ratio with the next argument.

Then comes the real magic: the -vf argument specifies a rather long filter string. You can think of it as a series of chains, separated by semicolons. The filters within each chain are separated by commas. Inputs and outputs are specified by names appearing inside square brackets. Read the rather terse and difficult documentation if you want to understand more, but it’s not too hard to walk through what the chains do. From “scale” to the first semicolon, we take the input video (the implicit input to the filter chain), resize it to the desired output size, fade in from black over the first 30 frames, and then run the high quality 3D denoiser, storing the result in register xxx. The next command creates a semi-transparent background color card which is 64 pixels high and the full width of the video, storing it in yyy. The next command takes the resized video xxx and the color card yyy, and overlays the color card at the bottom (y = 480 - 64 = 416). We could store that in a new register, but instead we simply chain on a drawtext command. It specifies an x, y, and fontfile, as well as a file “hdr” which contains the text that we want to insert. For this video, that file looks like:

The (too simple) Micro FM transmitter on a breadboard https://brainwagon.org | @brainwagon | mailto:brainwagon@gmail.com

The command then stores the output back in the register [xxx]. The next command reads a simple png file of the Creative Commons license badge and makes it available as a movie in register yyy. In this case, it’s just a simple .png, but you could use an animated file if you’d rather. The last command then takes xxx and yyy and overlays them so that the badge sits in the lower right corner, 10 pixels in from the right edge and 16 pixels up from the bottom (that’s what W-w-10:H-h-16 means).
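If the one-line -vf string is hard to read, the same filtergraph can be assembled from shell variables, one chain per variable, so that the bar and text positions follow from the output size. This is a hypothetical restructuring of the command above, not a different pipeline: the filters and labels are the ones just described, and only the whitespace around the semicolons differs. The variable names are made up for illustration.

[sourcecode lang="sh"]
#!/bin/sh
# Hypothetical restructuring of the -vf string above: same filters and labels,
# with the bar and text positions derived from the output size.
W=854; H=480        # output size
BAR_H=64            # height of the translucent title bar
FONT=/usr/share/fonts/truetype/ttf-dejavu/DejaVuSerif-Bold.ttf

BAR_Y=$((H - BAR_H))     # 416: bar flush with the bottom edge
TEXT_Y=$((BAR_Y + 16))   # 432: text starts 16 pixels into the bar

chain1="scale=$W:$H, fade=in:0:30, hqdn3d [xxx]"
chain2="color=0x33669955:${W}x${BAR_H} [yyy]"
chain3="[xxx] [yyy] overlay=0:$BAR_Y, drawtext=textfile=hdr:fontfile=$FONT:x=10:y=$TEXT_Y:fontsize=16:fontcolor=white [xxx]"
chain4="movie=88x31.png [yyy]"
chain5="[xxx] [yyy] overlay=W-w-10:H-h-16"   # these W, H, w, h are ffmpeg's, not shell variables

FILTERS="$chain1; $chain2; $chain3; $chain4; $chain5"
echo "$FILTERS"
# Then: ./ffmpeg -y -i ../Zi6_4370.MOV -sameq -aspect 16:9 -vf "$FILTERS" microfm.mp4
[/sourcecode]

Changing BAR_H or the output size then moves the overlay and the text together, instead of having to recompute 416 and 432 by hand.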

And that’s it! To process my video, I just download it from the camera to my Linux box, change the title information in the file “hdr”, and then run the command. When it is done, I’m ready to upload the file to YouTube.
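Editing hdr by hand each time is easy enough, but a small shell function could write it from a title given on the command line. This is a hypothetical helper, not part of the pipeline above, and it assumes the title goes on the first line of the file and the contact details on the second.

[sourcecode lang="sh"]
#!/bin/sh
# Hypothetical helper: write the text file that drawtext=textfile=hdr reads.
CONTACT='https://brainwagon.org | @brainwagon | mailto:brainwagon@gmail.com'

make_hdr() {  # args: title [output file, default ./hdr]
    printf '%s\n%s\n' "$1" "$CONTACT" > "${2:-hdr}"
}

# Example:
#   make_hdr "The (too simple) Micro FM transmitter on a breadboard"
# then run the ffmpeg command as before.
[/sourcecode]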

A couple of improvements I have yet to add to my usual pipeline: I don’t really like the edge of the transparent color block: it would be nicer to use a gradient. I couldn’t figure out how to synthesize one in ffmpeg, but it isn’t hard if you have something like the netpbm utilities:

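[sourcecode lang="sh"]
#!/bin/sh
# Alpha mask, top 16 rows: ramp from transparent (black) to half-opaque (0x80).
pamgradient black black rgb:80/80/80 rgb:80/80/80 854 16 | pamtopnm > grad1.pgm
# Remaining 48 rows: constant half-opacity, for a 64-pixel-high bar in total.
ppmmake rgb:80/80/80 854 48 | ppmtopgm > grad2.pgm
pnmcat -tb grad1.pgm grad2.pgm > alpha.pgm
# Solid bar color (the same 0x336699 used for the ffmpeg color card), then attach the mask.
ppmmake rgb:33/66/99 854 64 > color.ppm
pnmtopng -force -alpha alpha.pgm color.ppm > mix.png
[/sourcecode]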

Running this script builds a file called ‘mix.png’, which you can read in using the movie node in the avfilter chain, just as I did for the Creative Commons logo. Here’s an example:

If I were a real genius, I’d merge this whole thing into a little python script that could also manage the uploading to YouTube.

If this is any help to you, or you have refinements to the basic idea that you’d like to share, be sure to add your comments or links below!

The (too simple) Micro FM transmitter on a breadboard

A couple of days ago, I mentioned Tetsuo Kogawa’s MicroFM transmitter, a simple one transistor FM radio transmitter. Tonight, I decided to put it together on an experimenter’s breadboard. I didn’t have the 2SC2001 transistor that Tetsuo Kogawa used, so I just dusted off one of my $.10 2N3904 transistors, and dug the rest of the components out of my junk box. I assembled it in the worst way imaginable, with no real attention to lead lengths (I left them all uncut) and fed with unshielded cable. It “worked”, after a fashion at least, but I counted four images of the transmitted signal up and down the FM broadcast band.

I suspect that if I built this properly on some copper clad with short lead lengths, it would work better, but it would probably still be rather horrible in terms of spectral purity. As such, it’s worth experimenting with, but I wouldn’t build something this simple and try to get range beyond my desktop.

Jesse Jackson Jr.: The iPad Is Killing American Jobs

I’m going to divert myself from my normally safe topics of conversation, and briefly wander into a matter of politics, because Representative Jesse Jackson Jr. has been getting a lot of press lately for his astonishingly stupid remarks about the iPad costing American jobs.

Jesse Jackson Jr.: The iPad Is Killing American Jobs.

Sigh.

First of all, blaming the demise of Borders on the iPad is a bit like blaming the winning horse for making slower horses lose. Go to your favorite financial reporting site, and examine the stock prices for Borders, for Barnes and Noble, and for Amazon. As recently as 2007, they were all running neck and neck in terms of performance: in fact, the traditional book sellers were outperforming Amazon through most of 2001-2003. But in 2007, Amazon exploded, nearly tripling in price, and despite the recession which caused a temporary dip, it has continued its remarkable growth. Amazon is now trading at nearly $200, while Barnes and Noble is limping along at $9 and Borders faces bankruptcy.

Why would this be the case? Well, it’s simply because Amazon is a better way to buy books.

For one thing, to many destinations, it’s cheaper. For reasons which escape me, Amazon doesn’t charge sales tax for orders shipped to most destinations. Here in California, that means that prices are often lower than you’ll find in brick and mortar stores, often even after you take shipping into account. We can thank the Internet Tax Freedom Act for this, which says that stores which do not have a physical selling presence are not required to collect sales taxes. (That’s according to Amazon’s page on the matter, at least; I am still confused by the rationale.) Presumably Congress can address this potentially unfair issue with legislation, although state and local governments frequently make curious choices in decreasing the value of money in their jurisdictions by imposing additional taxes, putting themselves at a disadvantage when compared to other areas.

But beyond cheapness, Amazon also is simply a better place to buy books. In the first place, I can get very close to any book that I want, new or used. I have rather esoteric and technical tastes in books, and it’s not wrong to say that most stores (such as Borders) simply don’t have the books I’m interested in buying. But if I go to Amazon’s website, I can get anything which is in print, and many used books (integration of new and used books in search is an awesome feature of Amazon). I recently got old out of print books on analog computing and computer architecture by doing searches on Amazon.com. B&N and Borders simply aren’t any help for those kinds of searches. Yes, if you wander into a Borders, you can have them order a book for you, but presumably you don’t need their help to do that: if you know what you want, you can just order it yourself. And if you don’t know what you want, you can use Amazon’s recommendation service to help find books that maybe you do want.

And of course lastly is that Amazon actually has an electronic book strategy. Amazon recognized the potential of electronic books early, invested and developed consumer products to fuel demand for ebooks, and now is reaping huge benefits. B&N is a step behind, but is at least trying to be competitive. Borders? They didn’t have a clue.

Amazon has recognized that it isn’t Borders who is their competition: it’s Apple. The iPad is an excellent ebook reader (and the Kindle app is the best of the ebook readers), so Amazon has to figure out a way to compete in that market without having to go directly toe-to-toe with Apple. And they discovered a good way: the Kindle, a less expensive (if less capable) reader that has enough good features to make it a reasonable choice.

Okay, now, back to Jackson’s comments.

The iPad (and probably just as importantly, Amazon) probably did destroy American jobs in one sense: they provided an insanely great product that changed the way that people buy and consume books. That’s disruptive: it means that the jobs of the thousands of people who stood between publishers and book buyers are simply lost. You probably don’t use a travel agent any more either, since services like Expedia and Travelocity exist. But the fact is that there really isn’t any practical reason for you not to just download your books or have them shipped directly from a central warehouse, and there are many consumer benefits to doing so.

We are facing an employment crisis: the skills that many of us have are simply not that valuable, especially in the sales arena. We can lament the loss of jobs (and it is a serious problem) but we shouldn’t misunderstand what is going on here: technology is creating new ways for manufacturers to sell more directly to consumers. It’s just more efficient. As consumers, we’ll enjoy reduced prices, right up until the point where our own jobs are lost, and we can’t afford to be consumers any longer.

If you thought about this a little bit harder than Jackson did, you might be comforted by the idea that there are winners and losers, playing in some kind of zero-sum game. But don’t get too happy: the winners are very few in comparison to the number of losers. Besides widening the gaps between rich and poor (which I’d submit isn’t good) it also has certain risks. As wealth becomes concentrated, the power of the few wealthy is increased. With increases in power comes increased potential for abuse and, well, insanity.

Blaming winners is silly. If the game is unfair, change the rules. If it’s no fun for the majority of participants, change the game. Provide value to participating in society, rather than exploiting it.

How to build the simplest transmitter?

In digging around for small AM radio schematics (I’m more interested in AM than FM), I ran across Tetsuo Kogawa’s site on building the “simplest” FM transmitter. It’s actually pretty cute, and has just a single transistor, a few resistors and caps, and a coil that you can wind yourself on the threads of a bolt. It’s also pretty easy to assemble “ugly” style, on a piece of copper clad board. Check it out.

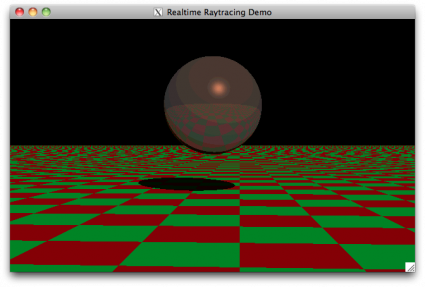

Code from the Past: a tiny real time raytracer from 2000

Back in 2000, I was intrigued by the various demos that I saw which attempted to implement real time raytracing. I wondered just what could be done with the computers I had on hand, without using any real tricks, but just a straightforward implementation of ray/sphere and ray/patch intersection. As I recall, I got two or three frames a second back then. I dusted off this code, and recompiled it for my MacBook (it uses OpenGL/GLUT, so it should be reasonably portable) and now it actually runs pretty reasonably. The code isn’t completely horrific, and might be of some use to someone as an example. It’s not clever, just straightforward and relatively easy to understand.

RTRT3, a tiny realtime raytracer

Addendum: I recompiled this on some of our spiffy machines at work, and immediately encountered a bug: there is nothing really to prevent infinite recursion. It’s possible (especially when the sphere and plane are touching) for a ray to get “stuck” between the sphere and plane. The simplest cure is to keep track of how deep the recursion is by passing a “level” variable down through the intersect routines, and return FALSE for any intersection which is “too deep” (say, beyond six reflections). When modified thusly, I get about 96 FPS on my current HP desktop machine, running on one core. It has 12, incidentally.
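The depth-limit fix is easy to see in miniature. This is not the RTRT3 code, just a hypothetical sketch in the same spirit: straightforward ray/sphere and ray/plane intersection, with a level counter passed down the recursion and FALSE (0 here) returned once it reaches the cap. POSIX sh has no floating point, so the math lives in awk, which does allow recursive functions. The scene is made up: a plane at z = 0 and a unit sphere hovering above it at (0, 0, 2). A ray fired straight down between them bounces vertically forever unless something stops it.

[sourcecode lang="sh"]
#!/bin/sh
# Hypothetical sketch (not the RTRT3 source): reflection with a recursion cap.
run_trace() {  # arg: maximum reflection depth (default 6)
    awk -v maxdepth="${1:-6}" '
    # Nearest t > 1e-6 where o + t*d hits the unit sphere centered at (0, 0, 2), else -1.
    function hit_sphere(ox, oy, oz, dx, dy, dz,    lz, a, b, c, disc, s, t) {
        lz = oz - 2
        a = dx*dx + dy*dy + dz*dz
        b = 2 * (dx*ox + dy*oy + dz*lz)
        c = ox*ox + oy*oy + lz*lz - 1
        disc = b*b - 4*a*c
        if (disc < 0) return -1
        s = sqrt(disc)
        t = (-b - s) / (2*a); if (t > 1e-6) return t
        t = (-b + s) / (2*a); if (t > 1e-6) return t
        return -1
    }
    # Nearest t > 1e-6 where the ray hits the plane z = 0, else -1.
    function hit_plane(oz, dz,    t) {
        if (dz == 0) return -1
        t = -oz / dz
        return (t > 1e-6) ? t : -1
    }
    # Return 1 if the ray escapes, 0 ("FALSE") once level reaches maxdepth.
    function trace(ox, oy, oz, dx, dy, dz, level,    ts, tp, t, px, py, pz, nx, ny, nz, k) {
        if (level >= maxdepth) return 0
        ts = hit_sphere(ox, oy, oz, dx, dy, dz)
        tp = hit_plane(oz, dz)
        if (ts < 0 && tp < 0) return 1            # hits nothing: escapes
        if (ts > 0 && (tp < 0 || ts < tp)) {      # sphere is nearer
            t = ts
            px = ox + t*dx; py = oy + t*dy; pz = oz + t*dz
            nx = px; ny = py; nz = pz - 2         # unit normal, since radius is 1
        } else {                                  # plane is nearer
            t = tp
            px = ox + t*dx; py = oy + t*dy; pz = 0
            nx = 0; ny = 0; nz = 1
        }
        bounces++
        k = 2 * (dx*nx + dy*ny + dz*nz)           # reflect: d - 2(d.n)n
        return trace(px, py, pz, dx - k*nx, dy - k*ny, dz - k*nz, level + 1)
    }
    BEGIN {
        r = trace(0, 0, 0.5, 0, 0, -1, 0)
        printf "result=%d bounces=%d\n", r, bounces
    }'
}

run_trace 6
[/sourcecode]

Running it prints result=0 bounces=6: the stuck ray is abandoned after six reflections instead of recursing indefinitely.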

Cool Hack O’ Day: real pixel coding

The problem with working some place with lots of intelligent people is that it is increasingly hard to maintain one’s sense of superiority. Today, I tip my hat to Inigo. He has a very cool demo here, where he creates a program by creating and editing a small image in Photoshop, saving it as a raw image file, and then renaming it to a .COM file and running it. It’s a testament to how clever, sneaky, and small programs can be.

Thanks to Jeri reposting this link on Twitter, I’m getting lots of hits. Thanks Jeri. But be sure to visit Inigo’s original post to read more about this cool hack:

el trastero » Blog Archive » real pixel coding.

Sony drops lawsuit against Geohot, but not really…

I was directed to a posting about the lawsuit between Geohot and Sony by a tweet from @adafruit.

Sony drops lawsuit against Geohot – a maker, hacker and innovator… « adafruit industries blog.

I think the article’s title is rather misleading. Sony didn’t drop the lawsuit: the lawsuit was settled. While Geohot admitted to no wrongdoing, he agreed to a permanent injunction barring him from distributing the information and code that he developed for the PS3. I don’t actually view this as a victory for hackers: Sony knew full well that once this information was published, the cat was already out of the bag. They also likely knew that any monetary damages they could have conceivably extracted wouldn’t pay for the costs of actually fighting the case. The only thing that they could really hope for was to use the threat of legal action to silence Geohot and any other PS3 hackers from further dissemination of their work.

Which is precisely what they did, and precisely what they got. The message from Sony wasn’t that “if you hack our system and publish the results, we realize that’s within your rights as an owner of Sony equipment”, it was “you can expect us to have our lawyers call you, and threaten you with a lawsuit that could cost you your life’s savings unless you capitulate.”

I don’t view this as a huge victory for owners of Sony products.

Announcing the “Soldering is Easy” Complete Comic Book!

Like many mechanical skills, soldering may seem fairly daunting if you’ve never done it before, but it’s really not that hard. If you need a basic getting-started guide, you could try out the new Soldering is Easy comic book. I think the only thing it really could use is a better guide to buying a soldering iron (lots of beginners buy the cheapest ones they can from Radio Shack, which is a guaranteed road to burned fingers and frustration). Check it out.

MightyOhm » Blog Archive » Announcing the “Soldering is Easy” Complete Comic Book!.

Cool Link on Maze Generation, with Minecraft Application

Josh read my earlier article on maze generation, and pointed me to this cool link via Twitter. It’s an article by Jamis Buck, and details all sorts of cool ways to generate mazes, with examples, applets, discussion… It’s simply great. It even includes an online maze generator for constructing random mazes suitable for construction in Minecraft. Worth checking out:

Update: Was Hank Aaron really that good?

Back in 2007, I was looking at the career total bases expressed as miles, mainly to demonstrate what an outstanding career Hank Aaron had.

brainwagon » Blog Archive » Was Hank Aaron really that good?

I stated with certainty that Bonds would never catch (and indeed, nobody may ever catch) Aaron’s numbers for total bases. In his career, Aaron had 6856 total bases. If you multiply that by 90 feet per base and divide by 5280 feet per mile, you find that Aaron ran 116.86 miles in his career.

Bonds finished with 5976 total bases, or 101.86 miles. That places him just above Ty Cobb (99.78 miles) but below Aaron, Musial and Mays.

Among active players, only Alex Rodriguez seems remotely possible, but he’s already in his 18th season, and has 5057 total bases (as of today). He’ll probably catch Griffey (5271 in 22 seasons) by the end of the year, but it would take about six to eight more years of playing at his current level to reach Aaron.
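The conversion is just total bases × 90 feet ÷ 5280 feet per mile. Here’s a small shell sketch that reproduces the mileage figures above, plus a hypothetical seasons-needed estimate for Rodriguez. The per-season rates of 225 and 300 total bases are assumptions chosen to bracket the estimate above, not his actual stats, and the function names are made up for illustration.

[sourcecode lang="sh"]
#!/bin/sh
# Total bases to miles: 90 feet per base, 5280 feet per mile.
tb_to_miles() {  # arg: career total bases
    awk -v tb="$1" 'BEGIN { printf "%.2f\n", tb * 90 / 5280 }'
}

# Hypothetical: seasons needed to reach Aaron's 6856 at a steady per-season rate.
seasons_needed() {  # args: current total bases, total bases per season
    gap=$(( 6856 - $1 ))
    echo $(( (gap + $2 - 1) / $2 ))   # integer ceiling of gap / rate
}

printf '%s\n' "Aaron 6856" "Bonds 5976" "Rodriguez 5057" |
while read -r name tb; do
    echo "$name: $(tb_to_miles "$tb") miles"
done
seasons_needed 5057 300   # 6
seasons_needed 5057 225   # 8
[/sourcecode]

Rodriguez’s 5057 total bases work out to 86.20 miles, leaving a gap of 1799 bases to Aaron.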