I recall burning three or four weeks of a sabbatical getting Saccade.com on the air with Wordpress. So much tweaking…

Drawing “circles” ala Marvin Minsky…

In my re-reading of Levy’s book Hackers, I was reminded of an interesting bit of programming lore regarding an early display hack that Marvin Minsky did for circle drawing. It’s an interesting hack because the lore was that it was originally coded by mistake, and yet the result proved to be both interesting and even useful. I first learned of this years ago when Blinn did an article on different ways to draw a circle, but also learned that it was part of the MIT AI memo called “HAKMEM”. Here’s the basic idea:

One way that you can draw a circle is to take some point x, y on the circle, and then generate new points by rotating them around the center (let’s say that’s the origin for ease) and connecting them with lines. If you have a basic understanding of matrix math, it looks like this:

/ x' \ / cos theta sin theta \ / x \ | | = | | | | \ y' / \ - sin theta cos theta / \ y /

(I should learn how to use MathML, but you hopefully get the idea). The matrix with the cosine and sine terms in it is a rotation matrix which rotates a point around by the angle theta. Apparently Minsky tried to simplify this by noting that cos(theta) is very nearly one for small theta, and that sin(theta) can be approximated by theta (we can get this by truncating the Taylor series for both). Therefore, he thought that (I’m guessing here, but it seems logical) that we could simplify this as:

/ x' \ / 1 eps \ / x \ | | = | | | | \ y' / \ -eps 1 / \ y /

Okay, it’s pretty obvious that this seems like a bad idea. For one thing, the “rotation” matrix isn’t a pure rotation. It’s determinant is 1 – eps^2 1 + eps^2, which means that points which are mapped move slowly toward away from the origin. Nevertheless, Minsky apparently thought that it might be interesting, so he went ahead an implemented the program. I’ll express it here is pseudo-code:

newx = oldx - eps * oldy newy = oldy + eps * newx

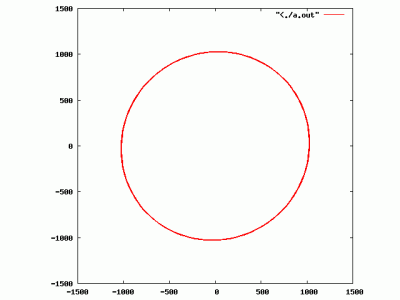

Note: this program is “buggy”. The second line should (for some definition of should) read “oldy + eps * oldx“, but Minsky got it wrong. Interestingly though, this “bug” has an interesting side effect: it draws circles! If eps is a power of 2, you can even implement the entire thing in integer arithmetic, and you don’t need square roots or floating point or anything. Here’s an example of it drawing a circle of radius 1024:

Well, there is a catch: it doesn’t really draw circles. It draws ellipses. But it’s still a very interesting bit of code. The first thing to note is that if you actually write down the transformation matrix that the “wrong” equations implement, the determinant is one. That helps explain why the point doesn’t spiral in. But there is another odd thing: if you implement this in integer based arithmetic, it’s eventually periodic (it doesn’t go out of control, all the round off errors eventually cancel out, and you return to the same point again and again and again). In HAKMEM, Schroeppel proclaims that the reason for the algorithm’s stability was unclear. He notes that there are a finite number of distinct radii, which indicates that perhaps the iterative process will always eventually fill in the “band” of valid radii, but the details of that seem unclear to me as well. I’ll have to ponder it some more.

Addendum: The real reason I was looking at this was because the chapter in Hackers which talks about Spacewar! also talks about the Minskytron whose official name was TRI-POS. It apparently uses some of this idea to draw curves in an interesting way. HAKMEM item #153 also suggests an interesting display hack:

ITEM 153 (Minsky):

To portray a 3-dimensional solid on a 2-dimensional display, we can use a single circle algorithm to compute orbits for the corners to follow. The (positive or negative) radius of each orbit is determined by the distance (forward or backward) from some origin to that corner. The solid will appear to wobble rigidly about the origin, instead of simply rotating.

Might be interesting to implement.

Addendum2: I goofed up the determinant above, it should be 1 + eps^2, not 1-eps^2. Corrected.

Comments

Comment from Steve VanDevender

Time 8/9/2010 at 6:45 pm

Wait, the ideal matrix is actually:

| newx | = | sqrt(1-eps^2) -eps | | oldx |

| newy | | eps sqrt(1-eps^2) | | oldy |

since really cos(a) = sqrt(1 – sin^2(a)).

Comment from Elwood Downey

Time 8/10/2010 at 9:35 am

I was wondering how to know when to stop. After a little experimenting I found that if eps = 1/N, then the circle is complete after 2*pi*N iterations. Any idea why this seems to work?

Comment from Mark VandeWettering

Time 8/10/2010 at 11:27 am

Yeah, I have some idea. As I mentioned above, eps is basically the approximation of the angle of rotation in radians. It takes 2 * pi / eps rotation to make a full circle.

Comment from Mark VandeWettering

Time 8/10/2010 at 11:28 am

One more thing: when it completes a full revolution, it may not actually return precisely to the same point. It can wind around several times before eventually returning to its starting place. I’m still pondering on the “proof” of eventual periodicity.

Comment from Steve VanDevender

Time 8/10/2010 at 10:40 pm

What I’ve been pondering is if there is a way to decompose the Minsky transformation

M = | 1 -eps |

| eps 1-eps^2 |

into a product of the form

M = Q * R * Q^-1

Where R is an ideal rotation, and Q might be something like a shear (and A^-1 is the matrix inverse of A). That might more clearly show why M is so much like a rotation and why it really traces out an ellipse. Unfortunately my matrix algebra is a little rusty towards coming up with the solution to that one.

Also, there may be some application towards a proof of periodicity in working out the diagonalization of the Minsky matrix

M = V * D * V^-1

Where D is a purely diagonal matrix, since then M^n = (V * D * V^-1)^n = V * D^n * V^-1, and D^n is easy to compute because its diagonal elements are just D[i][i]^n. If repeatedly appying M to a vector shows periodic behavior, then for some n M^n has to be close to (or equal to) an identity matrix, and the decomposition might indicate why.

Comment from Steve VanDevender

Time 8/12/2010 at 12:13 am

In fact, the diagonalization of the Minsky matrix makes it pretty clear that repeatedly transforming a vector with M has to produce periodic behavior.

M = | 1 -s |

| s 1-s^2 |

If we diagonalize M into the form V * D * V^-1, where D has nonzero elements only along its diagonal, then the diagonal elements of D will be the eigenvalues of M. To determine those diagonal elements we solve det(M – k*I) = 0 for k to get

k = (1 – s^2/2) + i * s * sqrt(1 – s^2/4)

The interesting thing about this is that for values of s we would normally be interested in (0 < s < 2) k is a complex number. |k| (its magnitude) is also 1 under those conditions. The second eigenvalue (using the negative square root) is the complex conjugate of k or k*. (The complex eigenvalues also indicate that, geometrically, M is much like a pure rotation in that there are no vectors that are just scaled by M and not rotated.) Any power of k or k* will also be a unit complex number and there must be some power n for which k^n = 1 and k*^n = 1.

This is great news. M^n = (V * D * V^-1)^n = V * D^n * V^-1, and we're interested in finding n such that M^n = I, which is satisfied if we can find D^n = I. We don't even need to know what V is in that case. The values of n for which k^n = 1 and k*^n = 1 (and hence D^n = I) are those n = m * 2 * pi / arg(k) (for any integer m, and arg(k) being the angle that k makes with the real axis, atan((s * sqrt(1 – s^2/4) / (1 – s^2 / 2))).

So at least in the real plane iterated transformation of a vector by M has to show periodic behavior for 0 < s < 2, although in general the period n won't be an integer. Even if n isn't an integer, integer powers M^p will stay in the periodic orbit.

This doesn't necessarily prove that M has to be periodic when using fixed-point arithmetic, although it strongly implies that if round-off error doesn't result in det(M) != 1, the behavior will also be periodic.

Comment from Kragen Javier Sitaker

Time 8/1/2015 at 7:02 pm

With fixed-point (or generally non-arbitrary-precision) arithmetic, this algorithm is a deterministic finite-state automaton with no input and, say, 16 bits of state in X and 16 bits of state in Y. Any DFA with no input has eventually-periodic behavior, perhaps with a degenerate period-1 single-state loop, but it doesn’t necessarily follow that its initial state is part of whatever periodic attractor it ends up in; it could be a sequence like A, B, C, B, (repeat). But for this to be true, some state must have more than one predecessor — in this example, B is preceded by both A and C. This means that the DFA’s transition function does not have a deterministic inverse: if you construct a time-reversed version of it, it’s nondeterministic. If the transition function is reversible (information-preserving), then you can run the DFA backward to the initial state (and beyond!) from any eventual successor state.

But the state transition function implemented by the HAKMEM-149 algorithm is reversible, as noted in HAKMEM-150, because each of the two steps in the loop, “x -= ?·y” and “y += ?·x”, are individually invertible — in fixed-point arithmetic, anyway. (In floating point, maybe you lose low-precision bits if the change results in floating the point one binary place to the left, so this doesn’t apply. And of course in “modern C” signed overflow is undefined, because to compiler vendors, the correctness of your code is less important than fractional percentage point performance increases.)

Therefore, with fixed-point arithmetic with the traditional overflow semantics, every possible initial state is a point on its own attractor — we eventually return to the original point, QED.

(However, as so vividly illustrated in “Minskys and Trinskys”, the periodic behavior from roundoff error and overflow need not look anything like a circle.)

In floating-point, the algorithm is not necessarily reversible, but the above argument demonstrates that it is still necessarily periodic — although conceivably with periods of up to 2?? or 2¹²?. In practice, it converges to periodicity very rapidly.

Comment from Kragen Javier Sitaker

Time 8/1/2015 at 7:04 pm

Your blog software has converted my superscript digits 6, 4, and 8 and my epsilon into question marks. It should be spanked with a newspaper. I hope later readers can decrypt the meaning of my comment.

Comment from Steve VanDevender

Time 8/9/2010 at 6:33 pm

The interesting thing to me, after briefly fiddling with the math of this, is how close Minsky’s mistake comes to creating the ideal transformation matrix. If you expand out the “mistaken” use of newx in his transform, you get this transformation matrix:

| newx | = | 1 -eps | | oldx |

| newy | = | eps 1-eps^2 | | oldy |

(yeah, I don’t know how to use MathML either). If we say eps = sin(a), then cos(a) = 1 -sin^2(a) = 1 – eps^2, so the ideal matrix is:

| newx | = | 1-eps^2 -eps | | oldx |

| newy | = | eps 1-eps^2 | | oldy |

Yow! Amazingly close, especially for small eps. If we attempt to decompose Minsky’s slightly off tranformation into a product of a pure rotation and, umm, something else, the something else will also have to have a determinant of 1 implyiing it’s something relatively simple like an area-preserving shear.