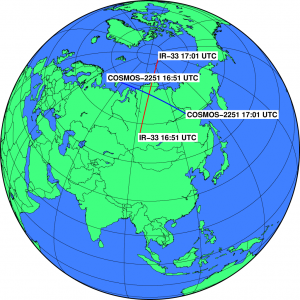

It was reported that an Iridium satellite and a “non-functional Russian satellite” collided yesterday. I was curious, so I did some digging and found that NASA had identified the two as Iridium 33 and COSMOS-2251. A bit more work uncovered orbital elements for both objects, so I was able to plug in their numbers and determine the location of the collision. With a bit more scripting, I had GMT generate the map at the top of this post.
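
The scripts behind that map aren't included here, so what follows is only a toy Python sketch of the general idea, not the code I actually ran. It swaps the real orbital elements for idealized circular orbits; a real calculation would propagate the published elements with a model such as SGP4. The orbit radius, the inclinations (86.4° and 74.0°), and the shared ascending node at t = 0 are hypothetical illustration values, and `circular_position` and `closest_approach` are made-up helper names.

```python
import math

# Hypothetical illustration values, not the real orbital elements.
EARTH_MU = 3.986004418e14      # Earth's gravitational parameter, m^3/s^2
RADIUS = 6_378_137 + 790_000   # assumed orbit radius: equatorial radius + ~790 km


def circular_position(radius, inclination_deg, raan_deg, phase_deg, t):
    """Inertial-frame position (m) on an idealized circular orbit at time t (s)."""
    n = math.sqrt(EARTH_MU / radius ** 3)   # mean motion, rad/s
    u = math.radians(phase_deg) + n * t     # angle travelled from the ascending node
    i = math.radians(inclination_deg)
    raan = math.radians(raan_deg)           # right ascension of the ascending node
    x_orb, y_orb = radius * math.cos(u), radius * math.sin(u)
    # Rotate from the orbital plane into the inertial frame.
    x = x_orb * math.cos(raan) - y_orb * math.cos(i) * math.sin(raan)
    y = x_orb * math.sin(raan) + y_orb * math.cos(i) * math.cos(raan)
    z = y_orb * math.sin(i)
    return (x, y, z)


def closest_approach(pos_a, pos_b, t_start, t_end, step):
    """Brute-force scan for the time and distance of minimum separation."""
    best_t, best_d = t_start, float("inf")
    t = t_start
    while t <= t_end:
        d = math.dist(pos_a(t), pos_b(t))
        if d < best_d:
            best_t, best_d = t, d
        t += step
    return best_t, best_d


# Two hypothetical orbits that cross at their shared ascending node at t = 0.
def iridium_like(t):
    return circular_position(RADIUS, 86.4, 0.0, 0.0, t)


def cosmos_like(t):
    return circular_position(RADIUS, 74.0, 0.0, 0.0, t)


t_min, d_min = closest_approach(iridium_like, cosmos_like, -600.0, 600.0, 1.0)
print(f"closest approach: {d_min:.1f} m at t = {t_min:.0f} s")
```

A real version would also have to convert the closest-approach position from the inertial frame into latitude and longitude (accounting for Earth's rotation) before handing it to GMT for plotting.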

According to my calculations, they passed within 100 meters of one another (though my code's uncertainty is much larger than that). Each satellite was travelling at about 26,900 km/hour. I don’t have the masses of the satellites, but even assuming they met at perfect right angles, each kilogram of mass carries about 28 million joules (28 MJ) of collision energy. According to this page on bird strikes, a major league fastball is about 112 joules, a rifle bullet is about 5,000 joules, and a hand grenade is about 600,000 joules. At 28 MJ per kilogram, every kilogram of these satellites hit with the energy of roughly 47 hand grenades. Ouch!
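
For anyone who wants to check the arithmetic, here is a minimal Python sketch of the back-of-the-envelope calculation. It assumes, as above, that the two satellites met at right angles, and adds one assumption of its own: that they have equal masses (the real masses are unknown, so everything is per kilogram). For two equal masses m, the reduced mass is m/2 and the relative speed is √2·v, so the energy available in the collision is ½·(m/2)·2v² = ½·m·v², which is v²/2 per kilogram of one satellite.

```python
import math

# Orbital speed quoted in the post, converted from km/h to m/s.
SPEED_KMH = 26_900
v = SPEED_KMH * 1000 / 3600  # roughly 7,470 m/s


def kinetic_energy_per_kg(speed_m_s: float) -> float:
    """Kinetic energy of 1 kg moving at speed_m_s, in joules (E = v^2 / 2)."""
    return 0.5 * speed_m_s ** 2


# Assumption (not stated in the post): two equal-mass satellites.
# Meeting at right angles, their relative speed is sqrt(2) * v.
v_rel = math.sqrt(2) * v
# Reduced mass mu = m*m / (m + m) = m/2, i.e. 0.5 kg per kg of one satellite.
reduced_mass_per_kg = 0.5
# Energy available in the centre-of-mass frame, per kg of one satellite.
collision_energy_per_kg = reduced_mass_per_kg * kinetic_energy_per_kg(v_rel)

# Reference energies quoted from the bird-strike page, in joules.
comparisons = {
    "major league fastball": 112,
    "rifle bullet": 5_000,
    "hand grenade": 600_000,
}

print(f"speed: {v:.0f} m/s")
print(f"collision energy: {collision_energy_per_kg / 1e6:.1f} MJ per kg")
for name, joules in comparisons.items():
    print(f"  = {collision_energy_per_kg / joules:,.0f} x a {name}")
```

A head-on collision would be worse: the relative speed doubles to 2v, and the energy per kilogram of one satellite rises to v², twice the right-angle figure.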

Addendum: It’s been a long time since I took basic physics. If you care, don’t trust my math: do it yourself and send me corrections. 🙂

Today is 3/14, also known as Pi Day. Wish a “Happy Pi Day!” to your coworkers, and be forever branded as a math geek.