A (not entirely simple) LCD display for the Arduino…

I am a big fan of Bill Meara N2CQR and Pete Juliano N6QW, hosts of the really great Soldersmoke Podcast. Together, they chat about homebrewing ham radio equipment, and what they’ve learned in their lessons along the way. Their “tribal knowledge” is of terrific help to someone like me who keeps making small forays into the world of homebrew.

Warning: this post may be written at a level either below or above any reader’s experience, and either might find it boring. You’ve been warned. Additional warning: I probably made this more complicated than it should have been. Skip to the bottom to find the resolution.

During Soldersmoke 175, they expressed some disgruntlement with what I call “the Arduino Tower of Babel”. Despite the Arduino’s reputation as the easiest way to get into using microcontrollers in your own homebrew electronics project, it can be really daunting and fraught with frustration and peril. In particular, they seemed to be having problems getting various “sketches” to compile and run properly, depending on what version of the Arduino IDE they were running. Via email, I offered to try to help out, perhaps acting as the Arduino Sherpa who could guide them to success. While I know there are lots of people out there who are more skilled, knowledgeable and experienced than I, I have enough general computer experience to often be able to sort out this kind of problem. I thought that instead of writing this all down as an email to them both, I’d post it here, where it might serve as a good bit of knowledge of general interest to those just getting started in using the Arduino and/or programming. A lot of this won’t come as much of a surprise to practitioners of the digital arts, but perhaps it might be of some use to someone (and hopefully Bill and Pete, although it appears that Bill has at least made some headway).

First of all, the way most people interact with the Arduino is through the Integrated Development Environment, commonly referred to as the IDE. It is a pretty simple looking program (well, as such things go; it can be daunting for beginners) which is actually a wrapper around several different components. What the user typically sees is a window for entering “sketches” (what most people would call “programs”), and a series of buttons to load, save, compile and download code to the target Arduino board that is connected via the USB port.

Like most popular software, it’s constantly being revised: new versions are being created all the time. Because it is what is called an “open source” project, no single company is responsible for changes; it evolves through the work of many different contributors. Each “release” of the code is tagged with a version number. As of this date, the latest version of the Arduino IDE that you can download from the primary site is 1.6.3. Because people hate to upgrade software, though, many are still using older versions, 1.0.5 being a common one.

I mentioned that the Arduino IDE consists of many different components: among these are a set of standardized “libraries” that encapsulate common functionality that lots of people find useful. When you use the Serial or Wire libraries, you are actually using code that is shipped with the Arduino IDE. These “standard” libraries usually work well right out of the box, and don’t change all that often. Thus, if you have a sketch which uses those libraries, or even more basic calls like digitalRead or digitalWrite, you probably won’t notice a lot of differences from one IDE version to the next.

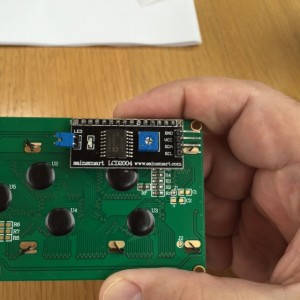

But some code is not part of the standard distribution. For instance, Pete was having difficulty getting an LCD display unit working properly with different versions of the Arduino IDE. I thought it might be a fun excuse to pick up an LCD panel to play with, so I asked him which one he used, and he emailed back this link, which seemed like a cool device. A mouse click, and two days via Amazon Prime, and I had one in my hand.

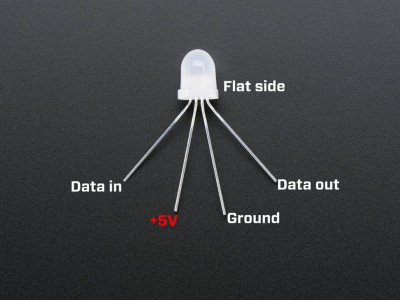

A pretty nice little unit, a little bigger than I expected. If you look at the back, you’ll see that it’s got a little board that looks a bit out of place connected to the back. It’s made by the company Sainsmart, and has four simple pins. That board is a converter which turns the display board (these are fairly common, but require a lot more connections) into one that uses what is called the “I2C bus”. Getting this display working requires a lot less wiring: just +5V and ground, and then two data pins, called SDA and SCL (“serial data” and “serial clock”, respectively). This makes hardware hookup a lot easier than with the conventional (and somewhat cheaper) models.

If you had an ordinary LCD without this backpack, you could use the standard “LiquidCrystal” library that ships with the Arduino IDE (documented here.) If you read the manual page for the “constructor” (the statement which creates a “LiquidCrystal” object that you can interact with), you can see that there are a bunch of ways to create one, depending on how you wire it up. These standard LCD panels require somewhere between six and eleven connections (plus power and ground) which can be a headache.

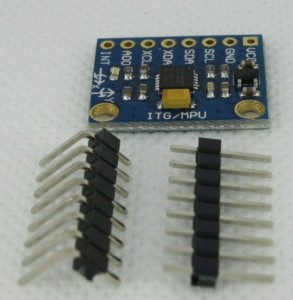

By contrast, this panel requires only two lines. Awesome! What’s even more awesome is that you can attach other devices that use the I2C bus to the same two lines. Each peripheral has a unique “address”, so programs can talk to each device independently, without adding any more wiring.

But there is a seemingly small problem, one that is familiar to users of desktop computers. To use these special devices, you need a custom library (think of a custom Windows device driver) that knows how to talk to this device. And here begin the problems that Pete and Bill had.

The libraries that ship with the official IDE are usually pretty well thought out, checked to make sure that they work well with the IDE, and are compatible. But this requires a custom library, and those libraries are not always tested against all versions of the IDE. Sometimes they work. Sometimes, not so much.

And, what’s worse is that this code isn’t versioned or vetted. You can have different versions of the code with the same library name. It’s hard to know what versions are the best, which are later revisions and which are earlier, and which were created or modified by, shall we say, less good programmers?

Okay, back to our LCD display.

I did what I always do: I googled, and found this version of something called LiquidCrystal_I2C as the top response. That seemed promising. Version 2.0! I downloaded it and installed it (by the way, installing libraries can be annoying in the Arduino IDE, maybe I will rant about that some other day, but you can find out the “right” way to do it here), opened their “Hello World” program, compiled and downloaded and…

Nothing.

Screen glitched a little, and rows of black squares. Argh.

Double checked the wiring. Nothing seemed to be wrong. Hmmm.

Deleted that version of the library, and after some judicious surfing, uncovered this driver on the DFRobot website. They make a board which looks an awful lot like the Sainsmart board. I thought I’d give it a whirl, even though it’s version 1.1 (and therefore presumably older).

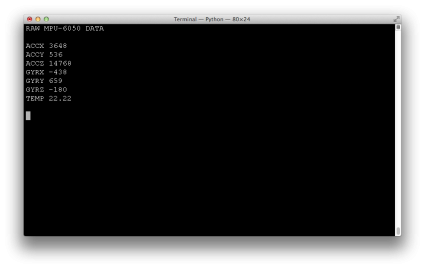

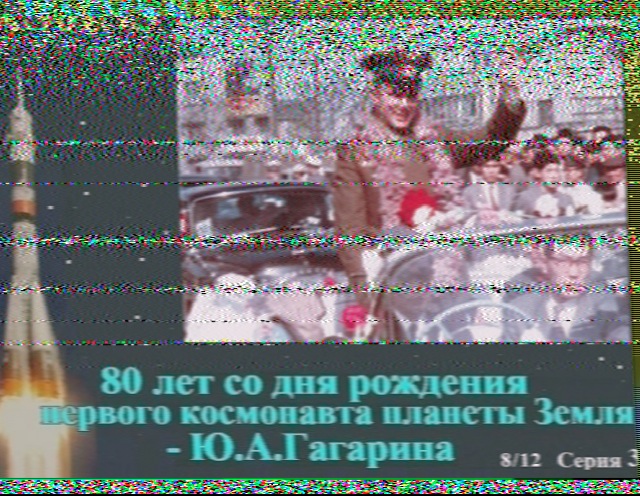

Results:

It’s at times like this that I feel waves of, well, if not rage then annoyance. I haven’t had the chance to figure out what the issue is (I’m an hour into this already, and it’s supper time) and have no doubt that I’ll be able to figure out what’s going on, but it’s annoying to beginners and experts alike that we have to do this kind of spelunking. Until I sort out this issue, I’ll just make a few recommendations:

- If you can use hardware supported by the standard libraries that ship with the Arduino, it’s probably worth doing.

- If you can’t (or choose not to) then perhaps do some searches to find what other people are doing to get stuff working.

- Document your successes and failures as best you can on the web somewhere. Be specific as to which version and platform (Windows, Mac, Linux) you are using.

- I actually recommend using the latest version of the IDE that’s available at arduino.cc, the official website. The older versions may “work just fine”, but you aren’t going to be able to take advantage of the many bug fixes and updates, and if you are interacting with other newbies, your code may not work. Best to get your own house in order, and then throw stones at whoever has code which doesn’t work properly with the latest official IDE.

To Bill and Pete: I feel your pain. I’d expect a device as common as this to work more or less out of the box. I’ll see if I can make a better suggestion soon. You might try using the code that worked for me, but that’s a poor solution really: I’m recommending using a modern IDE with old code. I’ll work on coming up with a better solution.

Addendum: Pete, in your email you indicated that the I2C address for your board was 0x3F. On mine, it actually turned out to be 0x27. I found this out by using an “I2C Bus Scanner”, a little sketch that runs on the Arduino and tries to find any devices by running through all 128 addresses. I was shocked to find that it’s not part of the standard examples, but if you google for “arduino i2c bus scanner” you can find many simple examples. I should note that the one I found screwed me up for a few minutes by printing the address in decimal (39) rather than hex (0x27).
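For the curious, here is a rough sketch of what such a scanner boils down to, written as plain C++ so you can try the logic on a desktop machine. This is not the scanner I downloaded: the names `formatAddress`, `scanBus` and `probe` are my own inventions for illustration. On a real Arduino, the probe would typically call `Wire.beginTransmission(addr)` and report whether `Wire.endTransmission()` returned 0, and you could get the hex printing with `Serial.print(addr, HEX)`.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <functional>
#include <string>
#include <vector>

// Format a 7-bit I2C address the way library docs usually write it:
// two hex digits with a 0x prefix (39 decimal becomes "0x27").
std::string formatAddress(uint8_t addr) {
    assert(addr < 128);  // I2C addresses here are 7 bits wide
    char buf[8];
    std::snprintf(buf, sizeof buf, "0x%02X", static_cast<unsigned>(addr));
    return std::string(buf);
}

// Walk the address range and collect every address that answers.
// `probe` is a hypothetical callback standing in for the hardware: it
// should return true when a device acknowledges the given address.
// Address 0 is skipped because it is the I2C "general call" address
// rather than the address of one particular device.
std::vector<uint8_t> scanBus(const std::function<bool(uint8_t)>& probe) {
    std::vector<uint8_t> found;
    for (uint8_t addr = 1; addr < 128; ++addr) {
        if (probe(addr)) {
            found.push_back(addr);
        }
    }
    return found;
}
```

Feeding it a fake probe that only answers at 0x27 should hand back a single address, and running that through `formatAddress` gives the form you can paste straight into the LCD constructor, which would have saved me those few minutes.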

Addendum2: Sigh. I may have been working too hard. Looking at the “official” distribution, it appears that the library does support I2C displays, although as near as I can tell, it’s completely undocumented, and none of the examples will work out of the box. I’ll figure out the right juju to get it to work soon, and will post it below.

Addendum3: In the words of Bill and Pete:

BASTA!!!!!!

Giving up for a moment, it’s acting stupidly on one of my dev machines. Hopefully what I said was not entirely wrong above, but it might be.

Addendum4: I was confused, but the rabbit hole keeps getting deeper. The version installed with 1.6.3 does not support I2C LCD displays. I was misled by looking at my installation on my Mac, which is not the standard 1.6.3, but is based on a system called PlatformIO. When it installs code for the Arduino, it installs this version of the LCD library. You can download the code for it here. It supports both the traditional 8- and 4-bit parallel interfaces, as well as the I2C based version, and seems to be well documented. One bummer: it’s meant as a direct drop-in replacement for the standard system library, so you basically have to delete the installed LiquidCrystal library, and replace it with this one. Read the instructions here. It all looks good, except for one thing:

It doesn’t seem to work properly with the Sainsmart interface either.

BASTA!!!!!!

I know that part of this is that there are dozens of clones and near clones out there, and it’s hard for the library writers to know about all of them, but this is genuinely crazy.

Addendum5: Wow, this is totally crazy. If you go to the Sainsmart page for the “LCD2004”, you’ll find a rar file which includes the library to access this hardware. Except, of course, that library is years old and will only work with the 1.0 versions of the Arduino IDE.

BASTA!!!!!!

That’s it Bill and Pete. I’m giving up on digital electronics, and am going to spend the rest of the evening looking for good deals on dual gate FETs and crystals for filters.

I recall burning three or four weeks of a sabbatical getting Saccade.com on the air with Wordpress. So much tweaking…